This is an archived version of the 2023/2024 run of the course. See the current version here.

NPFL099 – Statistical Dialogue Systems

About

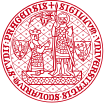

This course presents advanced problems and current state-of-the-art in the field of dialogue systems, voice assistants, and conversational systems (chatbots). After a brief introduction to the topic, the course will focus mainly on the application of machine learning – especially deep learning/neural networks – in the individual components of the traditional dialogue system architecture as well as in end-to-end approaches (joining multiple components together).

This course is a follow-up to the course NPFL123 Dialogue Systems, but can be taken independently – important basics will be repeated. All required deep learning concepts will be explained, but only briefly, so some machine learning background is recommended.

Logistics

Language

The course will be taught in English, but we're happy to explain in Czech, too.

Time & Place

Lectures and labs take place in room S10 (Malá Strana, 1st floor).

- Lectures: Tue 10:40

- Labs: Tue 9:50 (every other week, starts on 10 October)

In practice, we'll start with the lectures at 9:50 and, in even weeks, continue with the labs afterwards. The labs will likely be shorter than 45 minutes, as they mainly consist of instructions for the homework assignments.

In addition, we plan to stream both lectures and lab instructions over Zoom and make the recordings available on YouTube (under a private link, on request). We'll do our best to provide a useful experience, but note that the quality might not be ideal.

- Zoom meeting ID: 953 7826 3918

- Password is the SIS code of this course (capitalized)

If you can't access Zoom, email us or text us on Slack.

There's also a Slack workspace you can use to discuss assignments and get news about the course. Please contact us by email if you want to join and haven't got an invite yet.

Passing the course

To pass this course, you will need to take an exam and complete the lab homework assignments, which together amount to training an end-to-end neural dialogue system. See more details here. Note that the assignments will be the most challenging part of the course and will take some time to complete.

Topics covered

- Brief machine learning basics
  - neural network architectures
  - training techniques
  - pretrained language models
- Brief introduction to dialogue systems
  - dialogue systems applications
  - basic components of dialogue systems
  - knowledge representation in dialogue systems
  - data and evaluation
- Language understanding (NLU)
  - semantic representation of utterances
  - statistical methods for NLU
- Dialogue management
  - dialogue representation as a (Partially Observable) Markov Decision Process
  - dialogue state tracking
  - action selection
  - reinforcement learning
  - user simulation
  - deep reinforcement learning (using neural networks)
- Response generation (NLG)
  - introduction to NLG, basic methods (templates)
  - generation using neural networks
- End-to-end dialogue systems (one network to handle everything)
  - sequence-to-sequence systems
  - memory/attention-based systems
  - pretrained language models
- Open-domain systems (chatbots)
  - generative systems (RNN-based, Transformer, pretrained language models)
  - information retrieval
  - ensemble systems
- Multimodal systems
  - component-based and end-to-end systems
  - image classification
  - visual dialogue

Lectures

PDFs with lecture slides will appear here shortly before each lecture (more details on each lecture are on a separate tab). You can also check out last year's lecture slides.

1. Introduction Slides Questions

2. Data & Evaluation Slides Dataset Exploration Questions

3. Neural Nets Basics Slides Questions

4. Training Neural Nets Slides MultiWOZ 2.2 Loader Questions

5. Natural Language Understanding Slides Questions

6. Dialogue Management (1) Slides Finetuning GPT-2 on MultiWOZ Questions

7. Dialogue Management (2) Slides Questions

8. Language Generation Slides MultiWOZ 2.2 DB + State Questions

9. End-to-end Models Slides Questions

10. Chatbots Slides Two-stage decoding Questions

11. Multimodal systems Slides Questions

12. Linguistics & Ethics Slides Evaluation & Consistency task Bonus 1: Training on DailyDialog Bonus 2: Report Questions

Literature

A list of recommended literature is on a separate tab.

Lectures

1. Introduction

- What are dialogue systems

- Common usage areas

- Task-oriented vs. non-task oriented systems

- Closed domain, multi-domain, open domain

- System vs. user initiative in dialogue

- Standard dialogue systems components

- Research forefront

- TTS audio examples: formant, concatenative, HMMs, neural

2. Data & Evaluation

10 October Slides Dataset Exploration Questions

- Types of dialogue datasets

- Dataset splits

- Intrinsic vs. extrinsic evaluation

- Objective vs. subjective evaluation

- Evaluation metrics for dialogue components

3. Neural Nets Basics

- machine learning as function approximation

- machine learning problems (classification, regression, structured prediction)

- input features (embeddings)

- network shapes -- feed forward, CNNs, RNNs, attention, Transformer

4. Training Neural Nets

24 October Slides MultiWOZ 2.2 Loader Questions

- supervised training: gradient descent, backpropagation, cost

- learning rate, schedules & optimizers

- self-supervised: autoencoding, language modelling

- unsupervised: GANs, clustering

- reinforcement learning (short intro)

5. Natural Language Understanding

- problems of NLU

- common meaning representations -- intents + slots

- delexicalization, simple approaches

- various neural approaches to NLU (network shapes & training tasks)

- joint intent & slot models

- pretrained models, less supervision

6. Dialogue Management (1)

7 November Slides Finetuning GPT-2 on MultiWOZ Questions

- dialogue state tracking & action selection/policy

- dialogue state, belief state

- static & dynamic trackers, various approaches

- introduction to policies

- reinforcement learning, user simulator

7. Dialogue Management (2)

- reinforcement learning, value function

- actor, critic, actor-critic

- on-policy & off-policy

- Deep Q Networks

- Policy gradient methods (REINFORCE, Actor-critic)

- learned rewards

- hierarchical RL

8. Language Generation

21 November Slides MultiWOZ 2.2 DB + State Questions

- template-based generation

- NLG with RNN/transformer, pointer network, pretrained LMs

- decoding approaches

- data treatment

- reranking, combination with NLU

- NLG with content planning

9. End-to-end Models

- pipeline vs. single model, supervised vs. RL training

- models based on joining components

- seq2seq-based approaches, with pretrained LMs

- latent action spaces

- soft DB lookups, memory networks

10. Chatbots

6 December Slides Two-stage decoding Questions

- rule-based, generative, retrieval chatbots

- problems with seq2seq models

- hybrid/ensemble chatbots

- Alexa Prize

11. Multimodal systems

- Modalities in dialogue

- Standard virtual agents, embodied systems

- Convolutional networks and transformers for vision

- Neural visual dialogue, visual question answering

12. Linguistics & Ethics

19 December Slides Evaluation & Consistency task Bonus 1: Training on DailyDialog Bonus 2: Report Questions

- dialogue phenomena: turn-taking, grounding, speech acts, conversational maxims

- prediction & alignment in dialogue

- ethical considerations of NLP systems

- robustness, bias, safety

- privacy

Homework Assignments

There will be 6 homework assignments + 2 bonuses, each for a maximum of 10 points. Please see details on grading and deadlines on a separate tab.

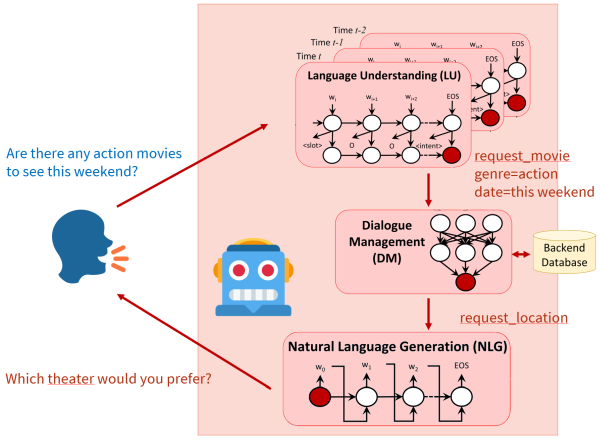

Assignments should be submitted via Git – see instructions on a separate tab.

All deadlines are 23:59:59 CET/CEST.

Note: If you don't have a faculty Gitlab account yet, please create one as soon as possible (see the instructions). Don't wait until the deadline! It takes 5 minutes, and if you don't do it, you won't have any way of submitting.

Index

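1. Dataset Exploration

2. MultiWOZ 2.2 Loader

3. Finetuning GPT-2 on MultiWOZ

4. MultiWOZ 2.2 DB + State

5. Two-stage decoding

6. Evaluation & Consistency task

7. Bonus 1: Training on DailyDialog

8. Bonus 2: Report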

1. Dataset Exploration

Presented: 10 October, Deadline: 27 October

Task

Your task is to select one dialogue dataset, download and explore it.

- Find out (and mention in your report):
  - The name of the dataset
  - What kind of data it is (domain, modality)
  - Where you downloaded it from (include the original URL)
  - How it was collected
  - What kind of dialogue system or dialogue system component it's designed for
  - What kind of annotation is present (if any at all) and how it was obtained (human/automatic)
  - What format it is stored in
  - What the license is

  Here you can use the dataset description/paper that was published with the data. The papers are linked from the dataset webpages or from here. If you can't find a paper, ask us and we'll try to help.

- Measure (and enter into your report):
  - Total data length (in terms of dialogues, turns, sentences, words; separately for user/system)
  - Mean/std dev dialogue lengths (in terms of turns, sentences, words; separately for user/system)
  - Vocabulary size (separately for user/system)
  - Shannon entropy over words in user/system turns (see slide 21 in Lecture 2)

  Here you should use your own programming skills. If your dataset has a train/dev/test split, use the training set. If there's no clear separation between a user and a system (e.g. human-human chitchat data, or NLU-only data), provide just the overall numbers.

- Have a closer look at the data and try to form an impression -- does the data look natural? How difficult do you think this dataset will be to learn from? How usable will it be in an actual system? Do you think there's some kind of problem or limitation with the data? Write a short paragraph about this in your report.
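As a possible starting point for the measurements, here is a minimal sketch of the vocabulary size, unigram Shannon entropy and dialogue length statistics. It assumes the dataset has already been read into a list of dialogues, each a list of turn strings with the user speaking first, and it uses lowercasing plus whitespace splitting as tokenization (a simplifying assumption; a proper tokenizer will give somewhat different counts). The entropy is computed as H = -Σ p(w) log2 p(w) over relative word frequencies; check slide 21 in Lecture 2 for the exact definition used in the course. The function names are illustrative only.

```python
import math
import statistics
from collections import Counter


def vocab_and_entropy(turns: list[str]) -> tuple[int, float]:
    """Vocabulary size and unigram word entropy (in bits) over a list of turns."""
    # Lowercasing + whitespace splitting is a simplifying assumption;
    # a proper tokenizer will give somewhat different numbers.
    counts = Counter(word for turn in turns for word in turn.lower().split())
    total = sum(counts.values())
    # H = -sum_w p(w) * log2 p(w), with p(w) the relative frequency of word w
    entropy = -sum((c / total) * math.log2(c / total) for c in counts.values())
    return len(counts), entropy


def length_stats(dialogues: list[list[str]]) -> tuple[float, float]:
    """Mean and population std dev of dialogue lengths, measured in turns."""
    lengths = [len(dialogue) for dialogue in dialogues]
    return statistics.mean(lengths), statistics.pstdev(lengths)


# Toy data; assumes the user speaks first, so even-indexed turns are user turns.
dialogues = [
    ["i need a hotel", "which area ?", "the north"],
    ["book a table", "for how many ?"],
]
user_turns = [turn for dialogue in dialogues for turn in dialogue[0::2]]
print(vocab_and_entropy(user_turns))  # (8, 2.9477...)
print(length_stats(dialogues))        # (2.5, 0.5)
```

The same functions can be applied separately to user and system turns; lengths in sentences or words only need a different `len` inside `length_stats`.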

Things to submit:

- A short summary detailing all of your findings (basic info, measurements, impressions) in Markdown as hw1/README.md.

- Your code for analyzing the data as hw1/analysis.py or hw1/analysis.ipynb.

See the submission instructions here (clone your Gitlab repo and add a new merge request).

Datasets to select from

Primarily one of these:

- DailyDialog (http://yanran.li/dailydialog.html)

- Schema-Guided Dialogue (https://github.com/google-research-datasets/dstc8-schema-guided-dialogue)

- CrossWOZ (https://github.com/thu-coai/CrossWOZ)

- Clinc-OOS (https://github.com/clinc/oos-eval)

- ConvBank (https://gitlab.com/ucdavisnlp/dialog-parsing)

- MetaLWOz/DSTC8 (https://www.microsoft.com/en-us/research/project/metalwoz/)

- PersonaChat (https://github.com/facebookresearch/ParlAI/tree/main/parlai/tasks/personachat, direct download link here, use the train_none_original data)

- Wizard of Wikipedia (https://parl.ai/projects/wizard_of_wikipedia/)

Others:

- AirDialogue (https://github.com/google/airdialogue)

- Edina Self-dialogue (https://github.com/jfainberg/self_dialogue_corpus)

- Empathetic Dialogues (https://github.com/facebookresearch/EmpatheticDialogues)

- FB Semantic Parsing for Dialogue (https://www.aclweb.org/anthology/D18-1300/, http://fb.me/semanticparsingdialog)

- FB multilingual (https://arxiv.org/pdf/1810.13327.pdf, https://fb.me/multilingual_task_oriented_data)

- Holl-E (https://github.com/nikitacs16/Holl-E)

- HWU NLU Evaluation data (https://github.com/xliuhw/NLU-Evaluation-Data)

- KG-Copy Data (https://github.com/SmartDataAnalytics/KG-Copy_Network, the data subdirectory)

- MS Dialogue Challenge Data (https://github.com/xiul-msr/e2e_dialog_challenge)

- Mimic & Rephrase (https://www.aclweb.org/anthology/K19-1037, https://github.com/square/MimicAndRephrase/)

- PolyAI Task-specific – Banking (https://github.com/PolyAI-LDN/task-specific-datasets)

- PolyAI Task-specific – Span Extraction (https://github.com/PolyAI-LDN/task-specific-datasets)

- SPOLIN improv data (https://github.com/wise-east/spolin)

- Snips (https://github.com/snipsco/nlu-benchmark/tree/master/2017-06-custom-intent-engines)

- Taskmaster-1 (https://ai.google/tools/datasets/taskmaster-1)

- Taskmaster-2 (https://research.google/tools/datasets/taskmaster-2/)

- Topical Chat (https://github.com/alexa/Topical-Chat)

- Ubuntu Dialogue (https://github.com/rkadlec/ubuntu-ranking-dataset-creator)

Further links

Dataset surveys (broader, but shallower than what we're aiming at):

- https://breakend.github.io/DialogDatasets/

- https://github.com/AtmaHou/Task-Oriented-Dialogue-Dataset-Survey

2. MultiWOZ 2.2 Loader

Presented: 24 October, Deadline: 10 November

Task

Your task is to create a component that will load the task-oriented MultiWOZ 2.2 dataset and process the data so it is prepared for model training. The component will consist of two Python classes -- one to hold the data, and one to prepare the training batches.

In later assignments, you will train a GPT-2 based model (similar to SOLOIST) using the data provided by this loader. Note that this means that the next assignments depend on this one.

We prepared some code templates for you in diallama/mw_loader.py to guide your implementation. You should not need to modify the code already present in the templates. If you feel you need to, you can do so, but please comment on your code changes in the MR. Please do not modify the file hw2/test.py under any circumstances (contact us if you really think you need to).

The bits that are waiting for your implementation are highlighted with # TODO: in diallama/mw_loader.py.

Note that to use the provided code, you'll need to install the dependencies provided in the requirements.txt. They can be installed easily via pip install -r requirements.txt.

Data background

MultiWOZ 2.2 is a task-oriented conversational dataset labeled with dialogue acts. It contains around 10k conversations between the user and a Cambridge town info centre (system). The dialogues are about certain topics: restaurants, hotels, trains, taxi, tourist attractions, hospital, and police. You can find more details in the dataset repository.

You can write your own dataset loader from the original format (see the dataset) but we recommend using the Huggingface Datasets library version.

This is what the data looks like if you load it using Huggingface Datasets: Each entry in the dataset represents one dialogue. The information we are interested in is contained in the field turns, which is a dictionary with the following important keys:

- speaker: Role associated with the speaker. It's either 0 (user) or 1 (system).
- utterance: The text of each dialogue utterance.
- dialogue_acts: Structured parse of the system utterances into dialogue acts. It contains slot names and the corresponding span_info (location of the slot in the utterance, which will come in handy later).
- frames: Present only in user utterances. Structured representation of the user's belief state.

Each of these keys is mapped to a list with labels for the corresponding turns, i.e. turns['speaker'][0] contains information for the speaker of the first turn and turns['speaker'][-1] of the last one.

The dataset contains the train, validation and test splits. Please respect them!

Note that MultiWOZ also contains a database (and you need database queries for your system to work correctly), but we'll address that later.

Dataset class

You need to implement the following properties for the Dataset class:

- It loads the data and processes it into individual training examples (containing contexts & responses so far; we'll skip dialogue states and the database for now).

- Each loaded example is a dictionary of the following structure:

      {
          'context': list[str],     # list of utterances preceding the current utterance
          'utterance': str,         # the string with the current response
          'delex_utterance': str,   # the string with the current response which is delexicalized, i.e. slot values are
                                    # replaced by corresponding slot names in the text.
      }

- Each dialogue of n turns will yield n // 2 examples, each with progressively longer context (starting from a context of length 1, up to n-1 turns of context). We are modelling only system responses!

- It distinguishes between data splits, i.e. it can be parameterized by split type (train, validation, test).

- It can truncate long contexts to the k last utterances, where k is a parameter of the class.

- It contains delexicalized versions of the utterances (where slot values are replaced with placeholders). You can use the data field dialogue_acts and its fields span_end, span_start for localizing the parts suitable for delexicalization. Replace those parts with the corresponding slot names from act_slot_name enclosed in brackets, e.g., [name] or [pricerange].
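The example creation and delexicalization steps can be sketched as follows. This is not the template's API: `make_examples` and `delexicalize` are hypothetical helper names, and the layout of `turns` (columnar lists, with `dialogue_acts[i]["span_info"]` holding parallel `act_slot_name`, `span_start` and `span_end` lists) is assumed from the description above. Spans are replaced right to left so that earlier character offsets stay valid.

```python
from typing import Optional


def delexicalize(utterance: str, span_info: dict) -> str:
    """Replace annotated slot-value spans with [slot_name] placeholders."""
    spans = sorted(
        set(zip(span_info["span_start"], span_info["span_end"], span_info["act_slot_name"])),
        reverse=True,  # replace from the end so that earlier offsets stay valid
    )
    # Assumes the annotated spans do not overlap.
    for start, end, slot in spans:
        utterance = utterance[:start] + f"[{slot}]" + utterance[end:]
    return utterance


def make_examples(turns: dict, max_context: Optional[int] = None) -> list:
    """One example per system turn (speaker == 1); the context is everything before it."""
    examples = []
    for i, speaker in enumerate(turns["speaker"]):
        if speaker != 1:  # we only model system responses
            continue
        context = turns["utterance"][:i]
        if max_context is not None:
            context = context[max(0, len(context) - max_context):]  # k last utterances
        utterance = turns["utterance"][i]
        span_info = turns["dialogue_acts"][i]["span_info"]
        examples.append({
            "context": context,
            "utterance": utterance,
            "delex_utterance": delexicalize(utterance, span_info),
        })
    return examples


NO_SPANS = {"act_slot_name": [], "span_start": [], "span_end": []}
turns = {  # toy dialogue in the assumed columnar layout
    "speaker": [0, 1, 0, 1],
    "utterance": [
        "I want a cheap restaurant",
        "Curry Garden is cheap and in the centre .",
        "Great , thanks",
        "You are welcome .",
    ],
    "dialogue_acts": [
        {"span_info": NO_SPANS},
        {"span_info": {"act_slot_name": ["name", "pricerange", "area"],
                       "span_start": [0, 16, 33], "span_end": [12, 21, 39]}},
        {"span_info": NO_SPANS},
        {"span_info": NO_SPANS},
    ],
}
for example in make_examples(turns, max_context=2):
    print(example["context"], "->", example["delex_utterance"])
```

The slicing with `max(0, ...)` is deliberate: `context[-k:]` would return the whole list for k = 0.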

Data Loader

The DataLoader class, as per the template, can do the following:

- It is able to yield a batch of examples (a simple list with examples of your Dataset) of a batch size given in the constructor.

- It will not use the original data order, but will shuffle the examples randomly.

- Yielding a batch repeatedly will never include the same example twice before all the examples have been processed.

You need to additionally implement the following property into DataLoader's batch handling (see _sort_examples_to_buckets_f):

- It will always yield conversations with similar lengths (numbers of tokens, context+utterance) inside the same batch.
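One common way to combine shuffling with length bucketing is sketched below; it is an illustration, not necessarily what the template expects from _sort_examples_to_buckets_f. The examples are shuffled, split into pools of `pool_factor * batch_size` examples, each pool is sorted by length and cut into batches, and finally the batch order is shuffled. Every example is used exactly once per pass. The function name, `length_fn` and `pool_factor` are hypothetical; in your loader, `length_fn` would count tokens of context+utterance.

```python
import random


def bucketed_batches(examples, batch_size, length_fn, pool_factor=50, seed=None):
    """Yield shuffled batches in which examples have similar lengths.

    Every example is yielded exactly once per call (one epoch).
    """
    rng = random.Random(seed)
    indices = list(range(len(examples)))
    rng.shuffle(indices)
    pool_size = batch_size * pool_factor
    batches = []
    for start in range(0, len(indices), pool_size):
        # Sort a random pool of examples by length, then cut it into batches,
        # so that examples inside one batch have similar lengths.
        pool = sorted(indices[start:start + pool_size], key=lambda i: length_fn(examples[i]))
        batches.extend(pool[i:i + batch_size] for i in range(0, len(pool), batch_size))
    rng.shuffle(batches)  # avoid always seeing the short batches first
    for batch in batches:
        yield [examples[i] for i in batch]


examples = [{"context": ["hi"] * n, "utterance": "ok"} for n in range(10)]
for batch in bucketed_batches(examples, batch_size=3,
                              length_fn=lambda ex: len(ex["context"]) + 1, seed=1):
    print([len(ex["context"]) for ex in batch])
```

A larger `pool_factor` gives tighter length grouping but less randomness in batch composition across epochs.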

Data loader batch encoding

Machine learning models usually work with numbers and matrices. That is why we also need to convert strings in our batches to integer IDs.

Therefore, inside your data loader class, you'll also need to implement a collate function (collate_fn) that has the following properties:

- It is able to work with batches coming from your data loader (lists of examples).

- It uses GPT2Tokenizer to split all strings into tokens (subwords) and assign them IDs.

- It converts the whole batch to a single dictionary (output) of the following structure:

      output = {
          'context': list[list[int]],          # tokenized context (list of subword ids from all preceding dialogue turns,
                                               # system turns prepended with a special `<|system|>` token and user turns with `<|user|>`)
                                               # for all batch examples
          'utterance': list[list[int]],        # tokenized utterances (list of subword ids from the current dialogue turn)
                                               # for all batch examples
          'delex_utterance': list[list[int]],  # tokenized and delexicalized utterances (list of subword ids
                                               # from the current dialogue turn) for all batch examples
      }

  where {k : output[k][i] for k in output} should correspond to the i-th example of the original input batch.

- Do not forget to correctly register all the new special tokens (<|system|>, <|user|>) into the tokenizer (check out the additional_special_tokens argument of the tokenizer)!
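The speaker tokens can be assigned without extra annotation: since only system responses are modelled, the last context utterance is always a user turn, and (assuming strictly alternating speakers, as in MultiWOZ) the speakers can be counted backwards from there. The sketch below takes the tokenizer as a plain callable so it runs without downloads; with Huggingface you would register the tokens via `tokenizer.add_special_tokens({"additional_special_tokens": ["<|system|>", "<|user|>"]})`, look up their ids with `tokenizer.convert_tokens_to_ids(...)`, pass `tokenizer.encode` as `tokenize`, and call `model.resize_token_embeddings(len(tokenizer))` before training. `encode_context`, `collate_text_fields` and the toy vocabulary are hypothetical.

```python
def encode_context(context, tokenize, user_id, system_id):
    """Flatten a context into ids, prefixing each turn with its speaker token.

    Assumes strictly alternating speakers: since we model system responses,
    the last context utterance is always a user turn.
    """
    ids = []
    n = len(context)
    for i, utterance in enumerate(context):
        from_user = (n - 1 - i) % 2 == 0  # count back from the last (user) turn
        ids.append(user_id if from_user else system_id)
        ids.extend(tokenize(utterance))
    return ids


def collate_text_fields(batch, tokenize, user_id, system_id):
    """Tokenize the string fields of a batch (a list of example dicts)."""
    return {
        "context": [encode_context(ex["context"], tokenize, user_id, system_id) for ex in batch],
        "utterance": [tokenize(ex["utterance"]) for ex in batch],
        "delex_utterance": [tokenize(ex["delex_utterance"]) for ex in batch],
    }


# Toy stand-in for a real tokenizer; ids chosen to mirror the worked example in HW3.
VOCAB = {word: i for i, word in enumerate("abcdefghijklmnopqr", start=1)}


def toy_tokenize(text):
    return [VOCAB[word] for word in text.split()]


batch = [
    {"context": ["a b", "c d e f", "g h i"], "utterance": "l m n", "delex_utterance": "l m n"},
    {"context": ["j k"], "utterance": "o p q r", "delex_utterance": "o p q r"},
]
print(collate_text_fields(batch, toy_tokenize, user_id=3321, system_id=3322))
```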

Things to submit:

- Your dataset and loader class implementations, both inside diallama/mw_loader.py.

- Output of hw2/test.py run on your data (test set is used by default), as hw2/results_test.txt. Have a look at what the script is doing, that'll help you with your implementation.

3. Finetuning GPT-2 on MultiWOZ

Presented: 7 November, Deadline: 12 December (extended!)

In this assignment, you will be fine-tuning the GPT-2 language model on the MultiWOZ dataset that you prepared. We'll ignore state tracking and the database for now; those will come later. For now, it suffices that the model gives you some reasonable answer from the correct domain; it doesn't necessarily have to be true :-).

Before you start any implementation, make sure you update from upstream! We swapped hw3 & hw4 compared to last year, so things won't make sense otherwise!

Data Loading Final Steps

Modifications for feeding data to the model

You'll need to add a few more steps to your data loader:

You will work with the diallama/mw_loader.py and modify the collate() method in the following way:

- concatenate contexts and delexicalized utterances into a single list

- add the token ID corresponding to the <|endoftext|> token as a delimiter and as the last token

- convert the contexts + utterances list of lists into a tensor, pad shorter sequences with zeros

- build 2 boolean masks -- one for the context, one for the utterance -- same size as the main tensor, with 1 for context/utterance tokens only (see the example below)

- build the attention mask -- this one simply shows 0 for padding and 1 for any valid tokens

- convert all masks into tensors

Loader outputs (collated) from HW2 looked like this:

    <|ENDOFTEXT|> = 3320
    <|USER|> = 3321
    <|SYSTEM|> = 3322

    contexts = [[3321, 1, 2, 3322, 3, 4, 5, 6, 3321, 7, 8, 9], [3321, 10, 11]]
    delex_utterances = [[12, 13, 14], [15, 16, 17, 18]]

What we need is to make them look like this:

    input_ids = [
        [3321, 1, 2, 3322, 3, 4, 5, 6, 3321, 7, 8, 9, 3320, 12, 13, 14, 3320],
        [3321, 10, 11, 3320, 15, 16, 17, 18, 3320, 0, 0, 0, 0, 0, 0, 0, 0],
    ]  # concatenation and padding

    context_mask = [
        [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0],
        [1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    ]

    utterance_mask = [
        [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1],
        [0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0],
    ]

    attention_mask = [
        [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1],
        [1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0],
    ]

    ...

Notice 3320 as the <|endoftext|> token and the zero padding in input_ids. Check the positions of 1 and 0 for all masks with respect to input_ids.
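The concatenation, padding and mask building can be sketched in plain Python; the function below reproduces the worked example above exactly, with the first <|endoftext|> counted as part of the context and the final one as part of the utterance. `build_inputs` is a hypothetical name, and the conversion to tensors is left out so the sketch runs without PyTorch; in the loader you would wrap each list with `torch.tensor(...)` (using `dtype=torch.bool` for the boolean masks if you prefer).

```python
def build_inputs(contexts, utterances, eos_id, pad_id=0):
    """Concatenate context + <|endoftext|> + utterance + <|endoftext|>, pad, build masks."""
    sequences, ctx_lens, utt_lens = [], [], []
    for context, utterance in zip(contexts, utterances):
        ctx = context + [eos_id]    # the delimiter belongs to the context part
        utt = utterance + [eos_id]  # the final token belongs to the utterance part
        sequences.append(ctx + utt)
        ctx_lens.append(len(ctx))
        utt_lens.append(len(utt))
    max_len = max(len(seq) for seq in sequences)
    out = {"input_ids": [], "context_mask": [], "utterance_mask": [], "attention_mask": []}
    for seq, c, u in zip(sequences, ctx_lens, utt_lens):
        pad = max_len - len(seq)
        out["input_ids"].append(seq + [pad_id] * pad)
        out["context_mask"].append([1] * c + [0] * (u + pad))
        out["utterance_mask"].append([0] * c + [1] * u + [0] * pad)
        out["attention_mask"].append([1] * (c + u) + [0] * pad)
    return out


contexts = [[3321, 1, 2, 3322, 3, 4, 5, 6, 3321, 7, 8, 9], [3321, 10, 11]]
delex_utterances = [[12, 13, 14], [15, 16, 17, 18]]
batch = build_inputs(contexts, delex_utterances, eos_id=3320)
print(batch["input_ids"][1])  # [3321, 10, 11, 3320, 15, 16, 17, 18, 3320, 0, 0, 0, 0, 0, 0, 0, 0]
```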

Model & training

For the model training, we have prepared the script hw3/train.py that uses the class Trainer from trainer.py.

Your task will be to fill the TODOs there to implement the training loop and validation step.

You will also need to create an optimizer and scheduler.

- Load the pre-trained GPT-2 model from the Huggingface Transformers library. More precisely, instantiate the GPT2LMHeadModel class and load weights from the pretrained model (see .from_pretrained(...)). Use the smallest version of the model ('gpt2').

- Fine-tune the model on the response generation task. This means that your objective is to minimize the negative log-likelihood (NLL) of the training data with respect to your model. Among a couple of other things, you need to use the model's forward() method (by simply calling model() as usual in PyTorch/HF) and feed in the proper parameters.

- Feed the whole input_ids tensors into the model, including the context.

- Only train the model to generate the response, not the context, by setting the model's target labels properly (see this note in the docs). Make use of the utterance_mask (or context_mask) to produce the correct labels input.

- Don't forget to use the attention_mask, so you avoid performing attention over padding.

- Feel free to experiment with the optimizer/scheduler and training parameters. A good choice might be the ones preset by Huggingface (AdamW, linear schedule with warmup).

- Use the largest batch size you can (the largest where your GPU doesn't run out of memory). It might actually be very small (1-4).

- Monitor the training and validation loss and use it to determine the hyperparameters (number of training epochs, learning rate, learning rate schedule, ...).

- First start debugging with very small data, just a few batches (test if the model learns something by checking outputs on the training data).

- Fix your random seeds so your results are repeatable, and you can tell if you actually changed something (must be done separately for Python and Numpy and PyTorch/Tensorflow!).
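A minimal sketch of deriving the labels from the utterance mask: positions outside the utterance get the value -100, which the Huggingface language-modelling loss ignores, so only response tokens contribute to the NLL. GPT2LMHeadModel shifts the labels internally, so labels are aligned with input_ids, not shifted by hand. The sketch uses plain lists; with tensors the same idea could be written as `input_ids.masked_fill(utterance_mask == 0, -100)` and the result passed as `model(input_ids=..., attention_mask=..., labels=labels).loss`. `make_labels` is a hypothetical name.

```python
IGNORE_INDEX = -100  # label value ignored by the Huggingface LM loss


def make_labels(input_ids, utterance_mask):
    """Keep utterance tokens as targets; ignore context and padding positions."""
    return [
        [tok if keep else IGNORE_INDEX for tok, keep in zip(row, mask_row)]
        for row, mask_row in zip(input_ids, utterance_mask)
    ]


input_ids = [
    [3321, 1, 2, 3322, 3, 4, 5, 6, 3321, 7, 8, 9, 3320, 12, 13, 14, 3320],
    [3321, 10, 11, 3320, 15, 16, 17, 18, 3320, 0, 0, 0, 0, 0, 0, 0, 0],
]
utterance_mask = [
    [0] * 13 + [1] * 4,
    [0] * 4 + [1] * 5 + [0] * 8,
]
print(make_labels(input_ids, utterance_mask)[1])
```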

Note: You may see the default Huggingface Trainer class used a lot. We're not doing that; we're building our own training loop for two reasons: (1) we need the “feed context + only train to generate responses” function, which wouldn't be straightforward there, (2) we want you to see how it's done on the low level.

Note: Training on the CPU is usually slow, so you'll likely want to use a GPU. You can use Google Colab, which provides GPUs for free for a limited time span. You can also get an account on our in-house AIC student computing cluster (Ondrej will get your accounts created and distribute passwords soon). Before you work on AIC, make sure you read instructions. You can prepare and debug your setup even without a GPU, then only run on the full data once you have access to a GPU.

Note: If you like experimenting, you can replace the GPT-2 model with a similar model trained on conversational data only, e.g., DialoGPT. You can find and browse all pre-trained Huggingface models here.

Decoding

Huggingface provides several options for decoding the outputs of your model. Go through the tutorial and choose a decoding method of your liking (you can go with greedy as the base option). Use it to generate utterances for the first 100 contexts available in the test set.

We have prepared the class GenerationWrapper, which you will need to complete to implement generation from the model.

Optional -- bonus points: Implement batch decoding as well. This is completely optional; if you are interested in the implementation, let us know.

Metrics

Besides the training and validation loss, we want you to report the following measures on the test set:

- token accuracy, i.e. the proportion of correctly predicted utterance token ids (apply argmax on the predicted raw logits and compare the result with the ground-truth token ids)

- perplexity -- have a look at the loss function you use for training, model perplexity is quite close to that
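Both metrics can be sketched in plain Python as follows. Two details are easy to miss: in a causal LM the logits at position t-1 predict the token at position t, so the comparison must be shifted by one; and perplexity is the exponential of the mean per-token NLL (in nats), so when aggregating over the test set it is safer to accumulate summed NLL and token counts than to average per-batch losses. The nested-list `logits` layout and the function names are assumptions made so the sketch runs without PyTorch.

```python
import math


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


def token_accuracy(logits, input_ids, utterance_mask):
    """Share of utterance tokens equal to the argmax of the previous position's logits."""
    correct = total = 0
    for seq_logits, ids, mask in zip(logits, input_ids, utterance_mask):
        for t in range(1, len(ids)):
            if mask[t]:  # only score utterance tokens
                correct += int(argmax(seq_logits[t - 1]) == ids[t])  # logits at t-1 predict token t
                total += 1
    return correct / total


def perplexity(token_nlls):
    """Perplexity = exp(mean per-token negative log-likelihood, in nats)."""
    return math.exp(sum(token_nlls) / len(token_nlls))


# One sequence over a toy 3-token vocabulary: first prediction right, second wrong.
logits = [[[0.1, 0.9, 0.0], [0.8, 0.1, 0.1], [0.3, 0.3, 0.4]]]
print(token_accuracy(logits, [[0, 1, 2]], [[0, 1, 1]]))  # 0.5
```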

Things to submit

- Updated loader class implementation (diallama/mw_loader.py)

- Code for model loading, training, decoding and metrics (diallama/trainer.py, hw3/train.py)
  - The code should include your training parameters (you can load them from a JSON/YAML config file if you want)

- Text file (hw3/multiwoz_outputs.txt) containing the first 100 generated test set responses, each on a separate line.

- Text file (hw3/multiwoz_scores.txt) containing your token accuracy and perplexity on the whole test set.

4. MultiWOZ 2.2 DB + State

Presented: 21 November, Deadline: 19 December (extended)

Task

This assignment is a continuation of HW2 and depends on it. Your task will be to extend your previously created data loader with belief state and database information.

This will allow us to train a full end-to-end dialogue model with two-step decoding (similar to SOLOIST) using the data provided by the loader you develop here. The model will first produce the current belief state based on dialogue history. The belief state will be used to query the DB, and using DB results, the system response will be generated. Note that this will be done in HW5 & 6, so these depend on HW4.

The implementation includes changes to the MultiWOZDatabase class (database search handling), the Dataset class (including database results and the belief state), and the DataLoader class (also including database results and the belief state).

Database

The MultiWOZ dataset is task-oriented, and the database is an important part of it. The database stores entities that are available for each domain, along with their attributes. You will use the database results when modelling the conversations, and therefore you need to implement the database query API. However, some domains are special, and their database queries need to be handled in a particular way. Also, the MultiWOZ dataset has a few rather annoying quirks. Therefore, we provide a partially implemented database class, which already handles the things that would be too annoying to deal with.

You still need to implement some things, though:

- Time-based search, which includes conversion of time strings into numbers and getting results before or after a particular time stamp. Specifically, you need to implement a function to convert arbitrary time representations to a 24h-formatted HH:MM string.

3pm -> 15:00

noon -> 12:00

three forty five -> 15:45

etc.

- Matching of search queries to the values in the database (see the code in diallama/database.py). The bits that are waiting for your implementation are highlighted with # TODO: in the code.
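For the time conversion in the first item above, a small rule-based sketch could look like this. It handles spelled-out numbers, am/pm suffixes, "noon"/"midnight" and a few digit formats, and returns None for anything else. To reproduce the "three forty five -> 15:45" example it relies on a heuristic assumption: a single-digit hour between 1 and 7 without am/pm is read as afternoon (so "05:00" stays 05:00, but "5:00" becomes 17:00). Adjust `ambiguous_pm_hours` to whatever fits your data. The function names and the heuristic are hypothetical, not part of the provided database class.

```python
import re

UNITS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
         "six": 6, "seven": 7, "eight": 8, "nine": 9}
TEENS = {"ten": 10, "eleven": 11, "twelve": 12, "thirteen": 13, "fourteen": 14,
         "fifteen": 15, "sixteen": 16, "seventeen": 17, "eighteen": 18, "nineteen": 19}
TENS = {"twenty": 20, "thirty": 30, "forty": 40, "fifty": 50}
SPECIAL = {"noon": "12:00", "midday": "12:00", "midnight": "00:00"}


def spell_out_to_digits(text):
    """'three forty five' -> '3 45'; other tokens are kept unchanged."""
    tokens, out, i = text.split(), [], 0
    while i < len(tokens):
        tok = tokens[i]
        if tok in TENS:
            value = TENS[tok]
            if i + 1 < len(tokens) and tokens[i + 1] in UNITS:  # e.g. "forty five"
                value += UNITS[tokens[i + 1]]
                i += 1
            out.append(str(value))
        elif tok in UNITS or tok in TEENS:
            out.append(str(UNITS.get(tok, TEENS.get(tok))))
        else:
            out.append(tok)
        i += 1
    return " ".join(out)


def normalize_time(text, ambiguous_pm_hours=range(1, 8)):
    """Convert a time expression to a 24h 'HH:MM' string, or None if unparseable."""
    s = text.strip().lower().replace("a.m.", "am").replace("p.m.", "pm")
    if s in SPECIAL:
        return SPECIAL[s]
    s = spell_out_to_digits(s)
    m = re.fullmatch(r"(\d{1,2})(?:[:.\s]?(\d{2}))?\s*(am|pm)?", s)
    if m is None:
        return None
    hour_str, minute_str, suffix = m.groups()
    hour, minute = int(hour_str), int(minute_str or 0)
    if suffix == "pm" and hour < 12:
        hour += 12
    elif suffix == "am" and hour == 12:
        hour = 0
    elif suffix is None and len(hour_str) == 1 and hour in ambiguous_pm_hours:
        hour += 12  # heuristic assumption: a bare "3:45" most likely means the afternoon
    if hour > 23 or minute > 59:
        return None
    return f"{hour:02d}:{minute:02d}"


for t in ["3pm", "noon", "three forty five", "05:00", "ten thirty"]:
    print(t, "->", normalize_time(t))
```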

Note that to use the provided code, you need to install fuzzywuzzy.

It is listed in the requirements.txt file, so if you followed the installation instructions, you probably have it already.

We recommend using it for partial matches, e.g., it allows you to match "London" to "London King's Cross" and similar situations.

Dataset class

This is an extension of the class from HW2. You'll need to implement code in the same spots as for HW2, just add some more stuff.

- You should keep all the properties from HW2&3 (example creation from dialogue, data splits, context length...).

- You need to add new belief_state and database_results fields, so each example will look like this:

      {
          'context': list[str],                       # list of utterances preceding the current utterance
          'utterance': str,                           # the string with the current response
          'delex_utterance': str,                     # the string with the current response which is delexicalized, i.e. slot values are
                                                      # replaced by corresponding slot names in the text.
          'belief_state': dict[str, dict[str, str]],  # belief state dictionary, for each domain a separate belief state dictionary,
                                                      # choose a single slot value if more than one option is available
          'database_results': dict[str, int]          # dictionary containing the number of matching results per domain
      }

- The belief_state is a dictionary that contains a mapping of domains to their corresponding belief states (slot-value pairs), i.e.

      {
          'restaurant': {'pricerange': 'cheap', 'area': 'north', ...},
          'hotel': {'parking': 'yes', ...},
          ...
      }

  Look into the frames fields of user utterances in the dataset to build the belief state.

- The database_results represent the counts of database entities matching the current belief state for each domain.

      {'restaurant': 101, 'hotel': 42, ...}

  You need to distinguish between the cases where 0 entities are matching and where the domain was not mentioned in the belief state and thus was not queried at all! Don't mention the domain in the results in the latter case.
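The distinction between "0 matches" and "not queried" can be sketched as below. `query(domain, constraints)` is a hypothetical stand-in for your database search method, assumed to return a list of matching entities, and the toy database is made up for illustration. The sketch also assumes that a domain with an empty constraint dict counts as not mentioned; if your belief state only ever contains mentioned domains, that filter is harmless.

```python
def count_results(belief_state, query):
    """Count matching entities per domain, only for domains present in the belief state."""
    return {
        domain: len(query(domain, constraints))
        for domain, constraints in belief_state.items()
        if constraints  # assumption: empty constraints = domain not mentioned -> not queried
    }


# Toy database and query function, for illustration only.
DB = {
    "restaurant": [{"area": "north", "pricerange": "cheap"},
                   {"area": "centre", "pricerange": "cheap"}],
    "hotel": [{"parking": "yes"}],
}


def toy_query(domain, constraints):
    return [e for e in DB.get(domain, []) if all(e.get(k) == v for k, v in constraints.items())]


belief = {"restaurant": {"pricerange": "cheap", "area": "south"},
          "hotel": {"parking": "yes"}, "taxi": {}}
print(count_results(belief, toy_query))  # {'restaurant': 0, 'hotel': 1}
```

Here the restaurant domain stays in the result with a count of 0, while the taxi domain, which was never constrained, is left out entirely.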

Data Loader

Again, you just need to extend your previously implemented class, so all the previous features (yielding batches, grouping similar lengths, shuffling, ...) still apply.

And again, you'll need to implement code in the same spots as for HW2, just add a little more.

Data loader batch encoding

Here you need to extend your collate function:

- Again, keep all the properties from HW2: use the inputs from the data loader and tokenize them using GPT2Tokenizer.

- The output of the function should now look like this (note the new belief_state and database_results fields):

output = {
    # From HW2
    'context': list[list[int]],           # tokenized context for all batch examples
    'utterance': list[list[int]],         # tokenized utterances (current dialogue turn) for all batch examples
    'delex_utterance': list[list[int]],   # tokenized and delexicalized utterances for all batch examples
    # From HW3
    'input_ids': Tensor[bs, maxlen],      # concatenated ids for context and utterance (sep. by special tokens)
    'attention_mask': Tensor[bs, maxlen], # mask, 1 for valid input, 0 for padding
    'context_mask': Tensor[bs, maxlen],   # mask, 1 for context tokens, 0 for others
    'utterance_mask': Tensor[bs, maxlen], # mask, 1 for utterance tokens, 0 for others
    # New, to be added
    'belief_state': list[list[int]],      # belief state dictionary serialized into a string, prepended with
                                          # the `<|belief|>` special token and tokenized (list of subword ids
                                          # from the current dialogue turn) for all batch examples
    'database_results': list[list[int]],  # database result counts serialized into a string, prepended with
                                          # the `<|database|>` special token and tokenized (list of subword ids
                                          # from the current dialogue turn) for all batch examples
}

where {key: output[key][i] for key in output} should correspond to the i-th example of the original input batch.

- Do not forget to correctly register all the new special tokens in the tokenizer (check out the additional_special_tokens argument of the tokenizer)!

- You can choose your own way of serializing the belief state and database results, or you can follow the format of SOLOIST, that is:

<|belief|> { restaurant { area : center , pricerange : cheap } attraction { area : south } } <|database|> { restaurant 45 , attraction 23 }
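One possible way to produce the SOLOIST-style strings from the belief state dict and the database counts is sketched below; the function names are hypothetical. The resulting strings would then be tokenized with GPT2Tokenizer after the special tokens have been registered (e.g. with `tokenizer.add_special_tokens({'additional_special_tokens': [...]})`, followed by `model.resize_token_embeddings(len(tokenizer))` so the model has embeddings for the new ids).

```python
from typing import Dict

BeliefState = Dict[str, Dict[str, str]]


def serialize_belief_state(state: BeliefState) -> str:
    """Render e.g. <|belief|> { restaurant { area : center } } (SOLOIST-like)."""
    parts = []
    for domain, slots in state.items():
        pairs = " , ".join(f"{slot} : {value}" for slot, value in slots.items())
        parts.append(f"{domain} {{ {pairs} }}" if pairs else f"{domain} {{ }}")
    inner = " ".join(parts)
    return f"<|belief|> {{ {inner} }}" if inner else "<|belief|> { }"


def serialize_db_results(counts: Dict[str, int]) -> str:
    """Render e.g. <|database|> { restaurant 45 , attraction 23 }."""
    inner = " , ".join(f"{domain} {count}" for domain, count in counts.items())
    return f"<|database|> {{ {inner} }}" if inner else "<|database|> { }"
```

Keeping spaces around every brace, colon, and comma makes each of them a separate GPT-2 token, which also makes the generated belief state easier to parse back later.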

Things to submit:

- Your dataset and loader class implementations, both inside diallama/mw_loader.py.

- Your database interface completion, inside diallama/database.py.

- Output of hw4/test.py run on your data (test set is used by default), as hw4/results_test.txt. Have a look at what the script is doing.

5. Two-stage decoding

Presented: 6th December, Deadline: 12th January (extended -- see note on Decoding)

In this assignment, you will continue fine-tuning the GPT-2 language model on the MultiWOZ dataset you prepared.

This time, your model will be enhanced with a belief tracking component and database access using 2-stage decoding.

Data Loading Final Steps

Modifications for feeding data to the model

You'll need to add a few final modifications to your data loader.

You will work with diallama/mw_loader.py and modify the collate() method in the following way:

- add belief states and database results to the input_ids (concatenate them between the context and the utterance)

- add delimiting tokens

- convert the list of lists containing contexts + beliefs + database outputs and utterances into a tensor, pad shorter sequences with zeros

- build 4 boolean masks (for the context, belief, database, and utterance, respectively); these will have the same size as the main tensor, with True for relevant tokens only (see the example below)

- convert all masks into tensors

Loader outputs (collated) from HW4 looked like this:

<|ENDOFTEXT|> = 3320

<|USER|> = 3321

<|SYSTEM|> = 3322

<|BELIEF|> = 3323

<|DB|> = 3324

contexts = [[3321, 1, 2, 3322, 3, 4, 5, 6, 3321, 7, 8, 9], [3321, 10, 11]]

utterances = [[12, 13 , 14], [15, 16, 17, 18]]

delex_utterances = [[12, 1111, 14], [15, 16, 1112, 18]] # some tokens replaced by delex. procedure

beliefs = [[3323, 100, 101], [3323, 102]]

dbs = [[3324, 204], [3324, 207]]

What we need is to make them look like this:

input_ids = [
    [3321, 1, 2, 3322, 3, 4, 5, 6, 3321, 7, 8, 9, 3323, 100, 101, 3324, 204, 3320, 12, 1111, 14, 3320],
    [3321, 10, 11, 3323, 102, 3324, 207, 3320, 15, 16, 1112, 18, 3320, 0, 0, 0, 0, 0, 0, 0, 0, 0]
]

context_mask = [
    [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
]

belief_mask = [
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
]

database_mask = [
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
]

utterance_mask = [
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1],
    [0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
]

...

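Notice the special tokens and the zero padding in input_ids. Check the positions of True and False (1 and 0) in all four masks with respect to input_ids. Note that each mask also covers the special token that opens the following segment (e.g. <|DB|> belongs to belief_mask), and utterance_mask includes the final <|ENDOFTEXT|>.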
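The sketch below shows one way to build input_ids and the four masks with plain Python lists so that it reproduces the example above exactly. It assumes the inputs are already tokenized and that 3320 is the <|ENDOFTEXT|> id, as in the toy example; in a real collate() the ids come from your tokenizer. The function name is hypothetical.

```python
from typing import Dict, List

EOS_ID = 3320  # <|ENDOFTEXT|> in the toy example above; take the real id from your tokenizer
PAD_ID = 0
MASK_NAMES = ["context_mask", "belief_mask", "database_mask", "utterance_mask"]


def collate_ids(contexts: List[List[int]], beliefs: List[List[int]], dbs: List[List[int]],
                utterances: List[List[int]], eos_id: int = EOS_ID,
                pad_id: int = PAD_ID) -> Dict[str, List[List[int]]]:
    batch: Dict[str, List[List[int]]] = {"input_ids": [], "attention_mask": []}
    batch.update({name: [] for name in MASK_NAMES})
    for ctx, bel, db, utt in zip(contexts, beliefs, dbs, utterances):
        # context + <|BELIEF|> ... + <|DB|> ... + <|ENDOFTEXT|> + utterance + <|ENDOFTEXT|>
        row = ctx + bel + db + [eos_id] + utt + [eos_id]
        batch["input_ids"].append(row)
        batch["attention_mask"].append([1] * len(row))
        # Segment lengths as in the example: each mask also covers the delimiter
        # opening the next segment; the utterance mask covers the final <|ENDOFTEXT|>.
        seg_lens = [len(ctx) + 1, len(bel), len(db), len(utt) + 1]
        start = 0
        for name, n in zip(MASK_NAMES, seg_lens):
            batch[name].append([0] * start + [1] * n + [0] * (len(row) - start - n))
            start += n
    # Right-pad everything to the longest sequence (pad_id for ids, 0 for masks)
    max_len = max(len(row) for row in batch["input_ids"])
    for key in list(batch):
        fill = pad_id if key == "input_ids" else 0
        batch[key] = [row + [fill] * (max_len - len(row)) for row in batch[key]]
    return batch
```

Converting the result to tensors is then a matter of e.g. `torch.tensor(batch["input_ids"])` and `torch.tensor(batch["belief_mask"], dtype=torch.bool)`.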

Model & training

For the model training, you will extend the code in train.py and in the Trainer class in trainer.py that you created in HW3, so that the model performs belief tracking correctly.

When training the model, you will train for both belief tracking and response generation simultaneously.

This means that your objective is to minimize the negative log-likelihood (NLL) of the training data with respect to your model.

Feed the whole input_ids tensors into your model, but when computing the loss, only the utterance and belief state tokens should be considered (use utterance_mask and belief_mask for the calculation).
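A minimal PyTorch sketch of this masked objective, assuming the masks follow the example above (they mark the target tokens themselves); the helper names are hypothetical. If you use HuggingFace's GPT2LMHeadModel, passing `labels=build_lm_labels(...)` should give the same loss, since the model shifts the labels internally and ignores positions set to -100.

```python
import torch
import torch.nn.functional as F


def build_lm_labels(input_ids: torch.Tensor, belief_mask: torch.Tensor,
                    utterance_mask: torch.Tensor) -> torch.Tensor:
    """Keep only belief and utterance tokens as targets; -100 is ignored by the loss."""
    keep = belief_mask.bool() | utterance_mask.bool()
    return input_ids.masked_fill(~keep, -100)


def masked_lm_loss(logits: torch.Tensor, labels: torch.Tensor) -> torch.Tensor:
    """Mean NLL over kept tokens; the logits at step t predict the token at step t + 1."""
    shift_logits = logits[:, :-1, :]
    shift_labels = labels[:, 1:]
    return F.cross_entropy(shift_logits.reshape(-1, shift_logits.size(-1)),
                           shift_labels.reshape(-1), ignore_index=-100)
```

Padding, context, and database positions are zero in both masks, so they never contribute to the loss.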

For general tips about the training, see the HW3 assignment.

Decoding

Here, you will implement the 2-stage decoding.

Extend your implementation of the GenerationWrapper in the following way:

- Pass the instance of your MultiWOZDatabase into the constructor and save it as an attribute of the wrapper.

- Modify the generation process:

  - Feed just the context and generate from the model until the <|db|> token is generated (if you're using HuggingFace's generate(), check out GenerationConfig.eos_token_id).

  - Parse the generated sequence (i.e. the belief state hypothesis) into a structured representation Dict[Text, Dict[Text, Text]].

  - Perform a database query using this representation and obtain the results.

  - Concatenate the context, the belief state hypothesis and the database results, all serialized into a string the same way as in your collate() function.

  - Feed it all to the model for the second generation stage, to generate the final response.

Note: The first decoding step was poorly specified (just mentioning <|endoftext|>), hence the deadline extension. Any solution to the first decoding step that gives you the belief state is OK. As an alternative to the approach proposed above, you can (a) decode until <|endoftext|> and throw away the nonsense DB results that the model will produce, (b) insert another <|endoftext|> in the data to stop generation at the given point, or (c) control the generation yourself, without the use of generate().
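For the parsing step, a regex-based sketch for the SOLOIST-like format suggested in the previous assignment could look as follows. The function name and the `db_token` default are assumptions; adjust them to your own serialization (e.g. `<|db|>`). It assumes slot values contain no commas or braces and silently skips malformed fragments, which matters because the first decoding stage may produce ill-formed output.

```python
import re
from typing import Dict

BeliefState = Dict[str, Dict[str, str]]
# "domain { slot : value , slot : value }" with no nested braces inside
DOMAIN_RE = re.compile(r"(\w+)\s*\{([^{}]*)\}")


def parse_belief_state(text: str, db_token: str = "<|database|>") -> BeliefState:
    """Parse a (possibly noisy) SOLOIST-style belief state hypothesis into a dict."""
    # Ignore anything the model generated after the DB token
    text = text.split(db_token)[0].replace("<|belief|>", " ")
    state: BeliefState = {}
    for domain, body in DOMAIN_RE.findall(text):
        slots = {}
        for pair in body.split(","):
            if ":" not in pair:
                continue  # skip malformed fragments produced by the model
            # Split on the first colon only, so values like "11:30" survive
            slot, value = (part.strip() for part in pair.split(":", 1))
            if slot and value:
                slots[slot] = value
        state[domain] = slots
    return state
```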

Metrics

Besides the training and validation loss, we want you to report the following measures on the test set:

- token accuracy, i.e. the proportion of correctly predicted token ids (apply argmax on the predicted raw logits and compare the result with the ground-truth token ids). Report the token accuracy for belief tracking and response generation separately as two different metrics.

- perplexity: here, you might find it helpful to figure out the relationship between data perplexity and the objective function used for training.

- Belief F1: on a randomly sampled subset T of 100 test sentences, also measure the F1 score of the generated belief states. You can use the same belief state parser as for the decoding. To measure F1, consider every slot a binary classification problem "is the value correct? yes/no". Then measure F1 for each slot separately and finally take a weighted average (by number of slot occurrences). This means you are computing a weighted macro-averaged F1 score.
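Two of these metrics as a pure-Python sketch (hypothetical names). Perplexity is the exponential of the mean per-token NLL in nats, i.e. of the training objective averaged over all scored tokens rather than over batches. For belief F1, the sketch assumes one reading of the description above: each domain-slot pair is a separate binary problem, a prediction is a true positive only if its value matches the gold value, and the weights are the slot occurrence counts in the gold states.

```python
import math
from collections import Counter
from typing import Dict, Iterable, List, Tuple

BeliefState = Dict[str, Dict[str, str]]


def perplexity(batch_stats: Iterable[Tuple[float, int]]) -> float:
    """batch_stats: (summed token NLL in nats, number of scored tokens) for each batch."""
    total_nll, total_tokens = 0.0, 0
    for nll_sum, n_tokens in batch_stats:
        total_nll += nll_sum
        total_tokens += n_tokens
    return math.exp(total_nll / total_tokens)


def _flatten(state: BeliefState) -> Dict[str, str]:
    return {f"{domain}-{slot}": value
            for domain, slots in state.items() for slot, value in slots.items()}


def weighted_slot_f1(golds: List[BeliefState], preds: List[BeliefState]) -> float:
    """Per-slot F1, averaged with weights = number of gold occurrences of each slot."""
    tp, fp, fn, support = Counter(), Counter(), Counter(), Counter()
    for gold_state, pred_state in zip(golds, preds):
        gold, pred = _flatten(gold_state), _flatten(pred_state)
        for slot in gold.keys() | pred.keys():
            if slot in gold:
                support[slot] += 1
            if slot in gold and pred.get(slot) == gold[slot]:
                tp[slot] += 1
                continue
            if slot in pred:
                fp[slot] += 1  # predicted value is wrong, or the slot is not in gold
            if slot in gold:
                fn[slot] += 1  # gold value was missed or predicted wrongly
    total = sum(support.values())
    if total == 0:
        return 0.0
    score = 0.0
    for slot, weight in support.items():
        denom = 2 * tp[slot] + fp[slot] + fn[slot]
        score += weight * (2 * tp[slot] / denom if denom else 0.0)
    return score / total
```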

Things to submit

- Updated loader class implementation (diallama/mw_loader.py)

- Code for model loading, training, decoding and metrics (diallama/trainer.py, hw5/train.py)
  - The code should include your training parameters (you can load them from a JSON/YAML config file if you want)

- Text file (hw5/multiwoz_outputs.txt) containing the generated responses from test subset T, each on a separate line.

- Text file (hw5/multiwoz_scores.txt) containing your token accuracies, perplexity and belief F1 on the test set.

6. Evaluation & Consistency task

Presented: 19th December, Deadline: 2 February (extended, see note on the Double Heads model)

Task

In this assignment, you will work with the model trained in HW5 and perform some more experiments. Basically, we will try to answer two questions:

- How well does your model perform?

- Can we improve the model performance by modifying the training process?

Model Evaluation

To evaluate your model's performance, you will report several metrics. Specifically, we want you to report:

- BLEU score,

- dialogue success rate (corpus-based, i.e. you generate each system turn with ground-truth context, then compute success at the end),

- variability of the generated language (number of distinct tokens and conditional bigram entropy).

To be able to compute the metrics, you will need to generate predictions from your model and save them in a machine-readable format, e.g. JSON. Use a subset of the test set (the first 100 dialogues) for generating the predictions.

For the computation of the scores themselves, you are free to use any implementation you like. However, the easiest way is to use this evaluation script. It can be easily installed via pip and allows you to measure all the required metrics (and some more).

The script is included in the requirements file in the repository, so if you used the recommended installation, you already have it installed.

For usage instructions, see its GitHub page.
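The evaluation script computes the variability measures for you; purely for intuition, the sketch below shows what they count. It assumes simple whitespace tokenization and ignores sentence boundaries, so its numbers will likely differ from the script's. Conditional bigram entropy is H(w2 | w1) = -Σ p(w1, w2) log2 p(w2 | w1), estimated here from bigram counts over all generated responses.

```python
import math
from collections import Counter
from typing import List


def distinct_tokens(responses: List[str]) -> int:
    """Number of distinct whitespace-separated tokens across all responses."""
    return len({tok for response in responses for tok in response.split()})


def conditional_bigram_entropy(responses: List[str]) -> float:
    """H(w2 | w1) in bits, estimated from bigrams within each response."""
    bigrams = Counter()
    for response in responses:
        toks = response.split()
        bigrams.update(zip(toks, toks[1:]))
    first = Counter()  # c(w1) = number of bigrams starting with w1
    for (w1, _), count in bigrams.items():
        first[w1] += count
    total = sum(bigrams.values())
    entropy = 0.0
    for (w1, w2), count in bigrams.items():
        entropy -= (count / total) * math.log2(count / first[w1])
    return entropy
```

A system that always continues a word the same way scores 0 bits; more varied continuations raise the value.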

Improving Performance

In this part of the assignment, you will need to modify your model's training process and retrain the model subsequently. The goal of this modification is to improve the belief state tracking performance of your model. To achieve this, we introduce an additional training objective: The model will have to distinguish between the ground-truth belief state and a corrupted version of the belief state. You will need to do the following modifications:

Data loading: belief state corruption

In your Dataset class, add a new field to each generated example. This field will contain a corrupted belief state. You will create the corrupted belief state by replacing each slot value by a different one with a probability p_r = 1/3, i.e. approximately 1/3 of slot values will be changed.

The corrupted belief state will then be encoded in the same way as the ground truth belief state.

When you concatenate the context, belief state and database results in your DataLoader class, you will determine whether this example uses the corrupted state or not based on probability p_c = 1/4, i.e. 1/4 of the training examples will contain the corrupted state.

You will also need to add a binary flag determining if a particular example was corrupted.

To sum up, you should follow this procedure for each example when preparing the data:

1. (Dataset) When the belief state dict is ready, make a corrupted version of it by randomly replacing each slot value with probability 1/3 (independently).

2. (Dataset) Add this corrupted belief state to the example dict.

3. (DataLoader) Decide whether the generated example will use the corrupted belief state, with p = 1/4.

4. (DataLoader) If yes, use the corrupted belief state when concatenating the input_ids; use the ground truth otherwise.

5. (DataLoader) Add a binary flag called corrupted that is set to True iff you used the corrupted belief state.

It's important to do steps 3-5 in the DataLoader so that one example can appear corrupted in one epoch but normal in others. Alternatively, everything could be done in the DataLoader, but it isn't necessary.
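A sketch of steps 1-5, assuming a hypothetical `value_pool` that maps each (domain, slot) pair to the values observed for it in the training data (you need some source of alternative values; the assignment does not prescribe one). The example field names `belief_state` and `corrupted_belief_state` are also assumptions. Passing a seeded `random.Random` makes the corruption reproducible.

```python
import copy
import random
from typing import Dict, List, Optional, Tuple

BeliefState = Dict[str, Dict[str, str]]
ValuePool = Dict[Tuple[str, str], List[str]]  # hypothetical: (domain, slot) -> observed values


def corrupt_belief_state(state: BeliefState, value_pool: ValuePool, p_r: float = 1 / 3,
                         rng: Optional[random.Random] = None) -> BeliefState:
    """Dataset side (steps 1-2): replace each slot value independently with probability p_r."""
    rng = rng or random.Random()
    corrupted = copy.deepcopy(state)  # never modify the ground-truth state
    for domain, slots in corrupted.items():
        for slot, value in list(slots.items()):
            # Only values different from the current one count as a replacement
            candidates = [v for v in value_pool.get((domain, slot), []) if v != value]
            if candidates and rng.random() < p_r:
                slots[slot] = rng.choice(candidates)
    return corrupted


def pick_belief_state(example: dict, p_c: float = 1 / 4,
                      rng: Optional[random.Random] = None) -> Tuple[BeliefState, bool]:
    """DataLoader side (steps 3-5): choose the state to concatenate and the `corrupted` flag."""
    rng = rng or random.Random()
    corrupted = rng.random() < p_c  # re-drawn on every call, i.e. in every epoch
    state = example["corrupted_belief_state"] if corrupted else example["belief_state"]
    return state, corrupted
```

With p_r = 1/3, a short belief state may come out of step 1 unchanged and still be flagged as corrupted in step 5; whether to re-sample in that case is a design choice the assignment leaves open.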

Model architecture

In this assignment, you will implement an additional training objective. Specifically, you will add a classification module that will perform a binary decision to detect belief state consistency (i.e. whether it was corrupted or not). To achieve this, the model has to be slightly modified. You can choose one of the two approaches:

- Instantiate a slightly modified version of the GPT2DoubleHeadsModel class, which is designed with a similar idea in mind, and treat the inputs accordingly. You can check out this gist to see the required modification: only the num_classes setting must be changed, but this requires creating a derived class. Additionally, you may want to look into the SequenceSummary configuration options and pass them on in the overridden constructor.

- Instantiate the GPT2LMHeadModel class as you did previously, and add a custom classification layer manually. This is arguably harder, but may give you more insight into the model internals.

Note: The original version of the assignment did not reflect the subtle change required in GPT2DoubleHeadsModel, hence the deadline extension. The original model assumes you feed it multiple alternatives to choose from (next utterance classification).

Training

Based on the changed architecture, we have 2 classification heads on top of the pretrained transformer model -- one for text generation (language modelling), one for the consistency classification.

- You will train the LM head the same way as before (i.e. with cross-entropy loss using labels derived from input_ids).

- To train the consistency classification head, either gather the transformer hidden states from all time steps and sum them up, or take the last hidden state. Then apply a linear+softmax layer on top of the resulting vector and minimize the binary cross-entropy between the predicted binary flag and the ground truth (i.e., whether you fed in the true state or the corrupted one).

Combine the losses (LM and consistency) as a weighted sum.

Note that for the corrupted examples, you should not backpropagate the LM loss on the belief state tokens, so that the model does not learn to generate corrupted states. That's where we use the corrupted flag.

Again, you have multiple options:

- You do not backpropagate the belief loss for corrupted examples at all, i.e., you compute the losses for the belief state and the response separately.

- You treat the labels for the belief state differently based on the corrupted flag (mask them out if corrupted == True).

Option 2 is easier to implement and is actually more efficient as well. Nevertheless, Option 1 can give you more control over the training. The decision is up to you.
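A minimal PyTorch sketch of the second architecture option (a custom head on top of GPT2LMHeadModel), combined with Option 2 and the weighted loss. The class and function names and the `cls_weight` default are hypothetical. The hidden states could be taken e.g. from the last element of `hidden_states` returned when calling the model with `output_hidden_states=True`. With two output logits, `F.cross_entropy` applies the softmax internally, which is equivalent to the binary cross-entropy described above. The last-token pooling assumes right padding.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ConsistencyHead(nn.Module):
    """Binary classifier on top of transformer hidden states (not part of transformers)."""

    def __init__(self, hidden_size: int, pooling: str = "last"):
        super().__init__()
        self.pooling = pooling
        self.classifier = nn.Linear(hidden_size, 2)  # logits: consistent / corrupted

    def forward(self, hidden_states: torch.Tensor, attention_mask: torch.Tensor) -> torch.Tensor:
        if self.pooling == "sum":
            mask = attention_mask.unsqueeze(-1).to(hidden_states.dtype)  # (bs, len, 1)
            pooled = (hidden_states * mask).sum(dim=1)  # sum over non-padding steps
        else:
            last = attention_mask.sum(dim=1) - 1  # index of the last non-padding token
            pooled = hidden_states[torch.arange(hidden_states.size(0)), last]
        return self.classifier(pooled)  # (bs, 2); softmax is applied inside cross_entropy


def mask_corrupted_belief_labels(labels: torch.Tensor, belief_mask: torch.Tensor,
                                 corrupted: torch.Tensor) -> torch.Tensor:
    """Option 2: ignore belief-state targets for examples fed a corrupted state."""
    drop = belief_mask.bool() & corrupted.bool().unsqueeze(1)
    return labels.masked_fill(drop, -100)


def combined_loss(lm_logits: torch.Tensor, labels: torch.Tensor, cls_logits: torch.Tensor,
                  corrupted: torch.Tensor, cls_weight: float = 0.5) -> torch.Tensor:
    """Weighted sum of the shifted LM loss and the consistency classification loss."""
    lm = F.cross_entropy(lm_logits[:, :-1].reshape(-1, lm_logits.size(-1)),
                         labels[:, 1:].reshape(-1), ignore_index=-100)
    cls = F.cross_entropy(cls_logits, corrupted.long())
    return lm + cls_weight * cls
```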

Experiments

Measure the same set of metrics as with the previous version of the model (see HW5), plus the metrics mentioned above (use the evaluation script). You don't need to use the additional head or any belief state corruption during the prediction. Use a subset of the test set for the experiments (the first 100 dialogues) to save time on inference.

What to submit

- Updated MultiWOZ loader class implementation (diallama/mw_loader.py).

- Updated code for model training, decoding and metrics (diallama/trainer.py; you may use multiple files if you want).
  - The code should include your training parameters (you can load them from a JSON/YAML config file if you want).

- Text file hw6/mw_outputs.json containing your generated test set belief states + responses (on the subset of the first 100 dialogues). The ideal format is structured and machine-readable, e.g. JSON.

- Text file (hw06/multiwoz_metrics.txt) containing the metrics described above (BLEU, success, distinct tokens, conditional bigram entropy) for both model variants, i.e. with and without the state corruption. Compute them on the same subset of the first 100 dialogues in the test set.

7. Bonus 1: Training on DailyDialog

Presented: 19th December, Deadline: 15 September

The first bonus assignment is just making your system work on a different dataset. We will be using the DailyDialog data. Your task is to adapt the loader you created in HW2 so that it works on this dataset. You then need to run your model in the version from HW3 on this data; the two-stage decoding is not applicable here.

Data background

DailyDialog is a chit-chat dialogue dataset labeled with intents and emotions. You can find more details in the paper describing the dataset.

Each DailyDialog entry consists of:

- dialog: a list of string features.

- act: a list of classification labels, e.g., question, commissive, ...

- emotion: a list of classification labels, e.g., anger, happiness, ...

The lists are of the same length and the order matters (it's the order of the turns in the dialogue, i.e. 5th entry in the act list corresponds to the 5th entry in the dialog list).

The data contains train, validation and test splits.

Needed implementation

Create your own version of the Dataset class (either by duplicating the code, or preferably by using a derived class) that is able to load DailyDialog data and process it into individual training examples. You don't need to care about acts or emotions; the only important things are contexts and responses.

Each resulting example should be a dictionary of the following structure:

{

'context': list[str], # list of utterances preceding the current utterance

'utterance': str, # the string with the current response

}

Note that in this case, you don't treat user and system turns differently -- you'll create a training example from each utterance (using the preceding utterances as context).
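Since user and system turns are not distinguished, turning one DailyDialog entry into examples reduces to splitting its dialog list at every position. A sketch with a hypothetical function name; it ignores act and emotion, as the assignment allows, and the optional `skip_first` flag (also an assumption) drops the opening turn, whose context is empty.

```python
from typing import Dict, List, Union

Example = Dict[str, Union[List[str], str]]


def dialog_to_examples(dialog: List[str], skip_first: bool = False) -> List[Example]:
    """One example per utterance, with all preceding utterances as context."""
    examples = []
    for i, utterance in enumerate(dialog):
        if skip_first and i == 0:
            continue  # optionally drop the opening turn, which has an empty context
        examples.append({"context": [u.strip() for u in dialog[:i]],
                         "utterance": utterance.strip()})
    return examples
```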

Make any necessary adjustments in your Data Loader and Model classes, so they are able to handle DailyDialog input (these should be minimal).

Training

Train your model on the new data exactly in the same way you did for HW3. You probably don't need as much debugging here, assuming the input looks reasonable, but you may need to change the training parameters.

Measure the same metrics you did for HW3, i.e. token accuracy and perplexity on the DailyDialog test set.

Things to submit

Name your branch hw7 for this submission. Include the following:

- Your loader implementation (as diallama/dd_loader.py) and updated training code (diallama/trainer.py, diallama/train.py)
  - The code should include your training parameters (you can load them from a JSON/YAML config file if you want)

- Text file (hw7/dd_outputs.txt) containing the generated test set responses, each on a separate line.

- Text file (hw7/dd_scores.txt) containing your token accuracy and perplexity on the validation set.

8. Bonus 2: Report

Presented: 19th December, Deadline: 15th September

The basic idea of the second bonus assignment is that you write a ca. 3-page report (1500 words), detailing your model and the experiments, so it all looks like an academic paper. The purpose of this is to give you some writing training, which might come in handy for your master's thesis or other projects.

Have a look at Ondrej's tips for writing reports here before you start writing!

What to include in the report text

- A short abstract, summarizing the main features of your model and your main results

- An introduction, motivating the model (feel free to draw on the lectures and other works for inspiration) and potentially summarizing your key results/findings.

- A short related works section (again, feel free to use the lectures) -- a bit more descriptive about the related works, highlighting key differences from your own

- Model description -- describe how the model(s) operate(s) during training and inference

- Experiments -- compare at least two variants of the model. For the MultiWOZ model, it's best to compare a version with and without the consistency auxiliary task. In any case, you can discuss two versions with different hyperparameter values, or compare to a version that only uses a portion of the training data (say 25%). Describe the datasets and your experimental settings in this section.

- Results -- describe the results. Try to draw some conclusions from the scores you got. Also, do a little error analysis -- compare the outputs for at least 10 dialogues (gold contexts + outputs of your two model variants) and summarize your findings. What kinds of errors do your systems make and how frequently? Is there a difference between the systems? Do you think that the automatic scores reflect the models' performance well?

- Conclusion (optional) -- just a short summary of your findings. Not necessary if you did it in the introduction already.

The prescribed format for your report is LaTeX, with the ACL Rolling Review templates. You can get the templates directly on Overleaf or download them for offline use.

Things to submit

Name your branch hw8 for this submission. Include this:

- The PDF of your report (under hw8/report.pdf)

- All the LaTeX code, including the templates, figures and references (hw8/*.*)

- The dialogues you used for your error analysis (under hw8/error_analysis/*.*, ideally as either plain text or JSON)

Homework Submission Instructions

All homework assignments will be submitted using a Git repository on MFF GitLab.

We provide an easy recipe to set up your repository below:

Creating the repository

- Log into your MFF gitlab account. Your username and password should be the same as in the CAS, see this.

- You'll have a project repository created for you under the teaching/NPFL099/2023 group. The project name will be the same as your CAS username. If you don't see any repository, it might be the first time you've ever logged on to Gitlab. In that case, Ondřej first needs to run a script that creates the repository for you (please let him know on Slack). In any case, you can explore everything in the base repository. Your own repo will be derived from this one.

- Clone your repository.

- Change into the cloned directory and run

git remote show origin

You should see these two lines:

* remote origin

Fetch URL: git@gitlab.mff.cuni.cz:teaching/NPFL099/2023/your_username.git

Push URL: git@gitlab.mff.cuni.cz:teaching/NPFL099/2023/your_username.git

- Add the base repository (with our code, for everyone) as your upstream:

git remote add upstream https://gitlab.mff.cuni.cz/teaching/NPFL099/base.git

- You're all set!

Submitting the homework assignment

- Make sure you're on your master branch

git checkout master

- Checkout new branch:

git checkout -b hwX

- Solve the assignment :)

- Add new files (if applicable) and commit your changes:

git add hwX/solution.py

git commit -am "commit message"

- Push to your origin remote repository:

git push origin hwX

- Create a Merge request in the web interface. Make sure you create the merge request into the master branch in your own forked repository (not into the upstream).

Merge requests -> New merge request

- Wait a bit till we check your solution, then enjoy your points :)!

- Once approved, merge your changes into your master branch – you might need them for further homeworks (but feel free to branch out from the previous homework in your next one if we're too slow with checking).

Updating from the base repository

You'll probably need to update from the upstream base repository every once in a while (most probably before you start implementing each assignment). We'll let you know when we make changes to the base repo.

To upgrade from upstream, do the following:

- Make sure you're on your master branch

git checkout master

- Fetch the changes

git fetch upstream master

- Apply the diff

git merge upstream/master master

Automated Tests

You can run some basic sanity checks for homework assignments; they are included in your repository (make sure to upgrade from upstream first).

Note that the tests require stuff from requirements.txt to be installed in your Python environment.

The tests check the files in the current directory and assume you have the correct branches set up.

For instance, to check hw1, run:

./run_tests.py hw1

By default, this will just check your local files. If you want to check whether you have your branches set up correctly, use the --check-git parameter.

Note that this will run git checkout hw1 and git pull, so be sure to save any local changes beforehand!

Always update from upstream before running tests, since we're adding checks for new assignments as we go. Some may only be available at the last minute, we're sorry for that!

AIC Cluster Computation Tips

This is just a short primer for the AIC wiki – better read that one, too. But definitely read at least this text before you start working with AIC.

Login

Use the command

ssh LOGIN@aic.ufal.mff.cuni.cz

where LOGIN is your SIS username.

On the head node

When you log on to AIC, you're at the cluster head node. Do not compute here; it is just for launching computation jobs, copying files and such. All of your computation jobs will run on one of the CPU/GPU nodes. (You can run the terminal multiplexing program on the head node.)

Workflow

There are two ways to compute on the cluster:

- submitting a batch script containing the commands you wish to run,

- executing commands in an interactive shell.

You should use a batch script for running longer computations. The interactive shell is useful for debugging.

Batch scripts

Use the sbatch command to submit your jobs (i.e. shell scripts) into a queue. For running a python command, simply create a shell script that has one line: your command with all the parameters you need.

You can either specify the parameters in the script or on the command line.

Here are two equivalent ways of specifying a GPU job with 2 CPU cores, 1 GPU and 16G system RAM (all GPUs have 11G memory):

Using SBATCH directives

- Create a file

job_script.sh:

#!/bin/bash

#SBATCH -J hello_world # name of job

#SBATCH -p gpu # name of partition or queue (if not specified default partition is used)

#SBATCH --cpus-per-task=2 # number of cores/threads per task (default 1)

#SBATCH --gpus=1 # number of GPUs to request (default 0)

#SBATCH --mem=16G # request 16 gigabytes memory (per node, default depends on node)

# here start the actual commands

sleep 5

echo "Hello I am running on cluster!"

- Execute the command:

sbatch job_script.sh

Using command-line parameters

- Create a file

job_script.sh:

#!/bin/bash

sleep 5

echo "Hello I am running on cluster!"

- Execute the command:

sbatch -J hello_world -p gpu -c2 -G1 --mem 16G job_script.sh

Have a look at the AIC wiki or man sbatch for all the command-line parameters.

(Note: long / short flags can be used interchangeably for both approaches.)

Interactive jobs

You can get an interactive console using srun.

The following command will run bash with the same resources as in the previous example:

srun -J hello_world -p gpu -c2 -G1 --mem=16G --pty bash

Important notes

- Always request the resources you'll need! (both for the batch and interactive jobs).

- With interactive jobs, don't forget to exit the console after use; you're blocking the GPU and whatever you reserve as long as the console is open!

- Don't submit too many jobs at a time (don't overfill the cluster, leave space for others).

- You can't request more than 8 CPU cores or 1 GPU.

Other commands

- Use sinfo to list the available queues.

- Use squeue --me or squeue -u LOGIN (where LOGIN is your username) to check your jobs.

- Use squeue to see every job currently running on the cluster.

- Use scancel JOB_ID to cancel a job.

Tips for efficient development

- terminal multiplexing: byobu (or tmux, screen) allows you to have multiple terminal sessions and keep everything open even if you lose connection (see a quick primer on byobu).

- SSH connection: mosh is able to reestablish the SSH connection after the connection is interrupted (unstable internet, turning the computer off, etc.).

- file management: you can access the AIC filesystem using SFTP: sftp://LOGIN@aic.ufal.mff.cuni.cz

- development: the SSH plugin for VS Code enables you to code in an IDE directly on the cluster.

- Python:

Links

- SLURM tutorial for ÚFAL cluster: https://wiki.ufal.ms.mff.cuni.cz/slurm

- SLURM cheatsheet: https://www.carc.usc.edu/user-information/user-guides/hpc-basics/slurm-cheatsheet

- sbatch manpage: https://manpages.org/sbatch

Exam Question Pool

The exam will have 10 questions from the pool below. Each question counts for 10 points. We reserve the right to make slight alterations or use variants of the same questions. Note that all of them are covered by the lectures, and they cover most of the lecture content. In general, none of them requires you to memorize formulas, but you should know the main ideas and principles. See the Grading tab for details on grading.

Introduction

- What's the difference between task-oriented and non-task-oriented systems?

- Describe the difference between closed-domain, multi-domain, and open-domain systems.

- Describe the difference between user-initiative, mixed-initiative, and system-initiative systems.

- List the main components of a modular task-oriented dialogue system (text/voice-based)

- What is the task (input/output) of speech recognition in a dialogue system?

- What is the task (input/output) of speech synthesis in a dialogue system?

Data & Evaluation

- What are the usual approaches to collecting dialogue data (name at least 2)?

- How does Wizard-of-Oz data collection work?

- What’s the difference between intrinsic and extrinsic evaluation?

- What is the difference between subjective and objective evaluation?

- What are the common options of getting people to evaluate your system?

- What are some evaluation metrics for non-task-oriented systems (chatbots)?

- How would you evaluate NLU (both slots & intents)?

- Explain an NLG evaluation metric of your choice.

- Describe how BLEU works (in principle, exact formulas not needed).

- Show at least 2 examples of subjective (human) evaluation metrics for dialogue systems.

- What is significance testing and what is it good for?

- Assume you have dialogue systems A and B, and A performs better than B in terms of response BLEU on a dataset of 100 dialogues. Describe how you’d test for significance.

- Why do you need to evaluate on a separate test set?

Neural Nets Basics

- What's the difference between classification and regression as a machine learning problem?

- Describe the task of sequence prediction (=autoregressive generation).

- What's the difference between classification and ranking as a machine learning problem?

- What's the difference between sequence labeling and sequence prediction?

- What is an embedding, what units can it relate to, and how can you obtain it?

- What are subwords and why are they useful?

- What's an encoder-decoder model and how does it work?

- How does an attention model work?

- What's the main principle of operation for convolutional networks?

- What's the difference between LSTM/GRU-based and Transformer-based architecture?

- Describe the basics of a Transformer language model.

- What's a pretrained language model?

Training Neural Nets

- Describe the principle of stochastic gradient descent.

- Why is it important to choose an appropriate learning rate?

- Describe an approach for learning rate adjustment of your choice.

- What is dropout, what is it good for and why does it work?

- What’s a variational autoencoder and how does it differ from a “regular” autoencoder?

- What is masked language modeling?

- How do Generative Adversarial Networks work?

- Describe the principle of the pretraining+finetuning approach.

- How does clustering work?

- What is self-supervised training and why is it useful?

- Describe 2 examples of a self-supervised training task.

- Can you apply a pretrained language model for a new task without finetuning it at all?

- How can you finetune a language model without updating all the parameters?

- What's instruction tuning and what is it good for?

Natural Language Understanding

- Design (a sketch of) an NLU neural architecture that joins intent detection and slot tagging.

- Describe language understanding as classification and language understanding as sequence tagging.

- What is delexicalization and why is it helpful in NLU?

- Describe one of the approaches to slot tagging as sequence tagging.

- What is the IOB/BIO format for slot tagging?

- How can you use pretrained language models (e.g. BERT) for NLU?

- How can you combine rules and neural networks in NLU?

- How can an NLU system deal with noisy ASR output? Propose an example solution.

Dialogue State Tracking

- What is the point of dialogue state tracking in a dialogue system?

- What is the difference between dialogue state and belief state?

- What's the difference between a static and a dynamic state tracker?

- What's a partially observable Markov decision process?

- Describe a viable architecture for a belief tracker.

- What is the difference between state trackers as classifiers vs. as candidate rankers?

- Describe the principle of state tracking as span selection.

Dialogue Policies

- Describe the basic reinforcement learning setup (agent, environment, actions, rewards)

- Map the general reinforcement learning setup to a dialogue system situation

- Why is reinforcement learning preferred over supervised learning for training dialogue managers?

- What are V and Q functions in a reinforcement learning scenario?

- What's the difference between actor and critic methods in reinforcement learning?

- Describe a Deep Q Network.

- Describe the REINFORCE approach.

- What’s the main principled difference between Deep Q-Networks and Policy Gradient methods?

- What are actor-critic reinforcement learning methods?

- What’s the difference between on-policy and off-policy optimization?

- Why do you typically need a user simulator to train a reinforcement learning dialogue policy?

- Give an example of possible turn-level or dialogue-level rewards for RL optimization.

- What is a user simulator? What are some common approaches to building one?

Natural Language Generation

- What are the main steps of a traditional NLG pipeline – describe at least 2.

- Describe a chosen handcrafted approach to NLG.

- What are the main problems with vanilla neural NLG systems (base RNN/Transformer)?

- What is a copy mechanism/pointer network?

- What is delexicalization and why is it helpful in NLG?

- Describe a possible neural approach to NLG with an approach to combat hallucination.

- How can you reduce NLG hallucination by altering the training data?

- How can you use pretrained language models in NLG?

- How can you combine templates with neural approaches for NLG?

- What are the typical decoding approaches in NLG? Explain & contrast at least 2.

End-to-end Models

- What are some pros and cons of end-to-end models over traditional modular ones?

- Describe an example structure of an end-to-end dialogue system.

- Describe the Sequicity (2-step decoding) model.

- Describe an end-to-end model based on pretrained language models.

- How would you adapt a pretrained language model for an end-to-end dialogue system?

- What are “soft” database lookups in end-to-end dialogue systems?

- How would you use reinforcement learning to train an end-to-end model?

- Why is it a bad idea to train end-to-end dialogue systems only with reinforcement learning on the word level?

- How can you increase consistency (w.r.t. dialogue state) in an end-to-end model?

Chatbots

- What are the three main approaches to building chatbots?

- How does the Turing test work? Does it have any weaknesses?

- What are some techniques rule-based chatbots use to convince their users that they're human-like?

- Describe how a retrieval-based chatbot works.

- Why is a plain seq2seq-based architecture for chatbots problematic?

- Describe an example approach to improving diversity or coherence in a seq2seq-based chatbot.

- How can you use a pretrained language model in a chatbot?

- Describe a possible architecture of a hybrid/ensemble chatbot.

- How does retrieval-augmented generation work?

Multimodality

- How does the structure of (traditional) multimodal dialogue systems differ from non-multimodal ones?

- Give 3 examples of alternative input modalities (i.e. not voice/text).

- Give 3 examples of alternative output modalities (i.e. not voice/text).

- How would you build a multimodal end-to-end neural dialogue system (e.g. for visual dialogue)?

- Explain some problems that may occur when a dialogue system talks to two people at once.

- What’s the difference between image classification and object detection?

- How would you build a neural end-to-end image-based dialogue system (consider using pretrained components)?

- What is the task of visual dialogue about?

Linguistics & Ethics

- What are turn-taking cues/hints in a dialogue? Name a few examples.

- What is a barge-in?

- What is grounding in dialogue?

- Give some examples of grounding signals in dialogue.

- What is entrainment/alignment/adaptation in dialogue?

- Describe the overgeneralization/overconfidence problem in data-driven NLP models.

- Describe the demographic bias problem in data-driven NLP models.

- Give an example of a user safety concern in dialogue systems.

- What's the problem with training neural models on private data?

Course Grading

To pass this course, you will need to:

- Take an exam (a written test covering important lecture content).

- Do lab homeworks (implementing an end-to-end dialogue system + other tasks).

Exam test

- There will be a written exam test at the end of the semester.

- There will be 10 questions, each worth a maximum of 10 points; we expect an answer of at least 2-3 sentences.

- You may get bonus points for correct and more detailed answers.

- A list of possible exam questions is available on the website.

- To pass the course, you need to get at least 50% of the total points from the test.

- If needed, there will be exam dates in the summer.

Homework assignments

- There will be 6 homework assignments, introduced every other week, plus some bonus assignments.

- You will submit the homework assignments into your private Gitlab repository.

- For each assignment, you will get a maximum of 10 points.

- You may get a bonus point for nice code.

- All assignments will have a fixed deadline (typically 2-3 weeks).

- You can ask for a deadline extension if you have a good reason, such as illness.

- If you submit the assignment after the deadline, you will get:

  - up to 50% of the maximum points if it is less than 2 weeks after the deadline;

  - 0 points if it is more than 2 weeks after the deadline.

- Any bonus points you get will not be lowered.

- Note that most assignments depend on each other! That means that if you miss a deadline, you still might need to do an assignment without points in order to score on later assignments.

- Once we check the submitted assignments, you will see the points you got and the comments from us on Gitlab, in a comment on your merge request.

- Please bear with us while we check the assignments; it's harder than it looks.

- If you're unsure about your total points, feel free to ask.

- You can take the exam even if you don't reach 40 homework assignment points, but you need to get at least 40 points to get the final grade.
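The late-submission rules above can be read as a small scoring function. The following is a minimal Python sketch under stated assumptions, not the official checking procedure: `late_adjusted_points` is a hypothetical helper, "up to 50% of the maximum points" is interpreted as a cap at half of the 10-point maximum, and a submission exactly 2 weeks late (which the rules leave open) is treated as getting 0 points.

```python
def late_adjusted_points(regular_points: float, bonus_points: float,
                         days_late: float, max_points: float = 10.0) -> float:
    """Hypothetical helper applying the late-submission rules.

    Assumptions: "up to 50% of the maximum points" caps the regular score
    at half of max_points; exactly 14 days late counts as "more than 2 weeks".
    """
    if days_late <= 0:
        # Submitted on time: regular points count in full.
        adjusted = regular_points
    elif days_late < 14:
        # Less than 2 weeks late: regular points capped at 50% of the maximum.
        adjusted = min(regular_points, 0.5 * max_points)
    else:
        # 2 weeks or more late: no regular points.
        adjusted = 0.0
    # Bonus points are never lowered, so they are added unchanged.
    return adjusted + bonus_points
```

For example, a solution worth 9 points plus 1 bonus point submitted 5 days late would get 5 + 1 = 6 points under these assumptions.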

Grading

The final grade for the course will be a combination of your exam score and your homework assignment score, weighted 3:1 (i.e. the exam accounts for 75% of the grade, the assignments for 25%).

Grading:

- Grade 1: >=87% of the weighted combination

- Grade 2: >=74% of the weighted combination

- Grade 3: >=60% of the weighted combination

- An overall score of less than 60% means you did not pass.

In any case, you need >50% of points from the test and 40+ points (i.e. 66%) from the homeworks to pass. If you get less than the minimum from either, even if you get more than 60% overall, you will not pass.
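The grading rules above can be sketched as a small function. This is a hypothetical illustration, not the official grade computation: `final_grade` is an invented helper, the exam maximum is assumed to be 100 points (10 questions × 10 points) and the regular homework maximum 60 points (6 assignments × 10 points), bonus points are assumed to simply add to the raw scores, and the exam minimum follows the "at least 50%" wording of the exam section.

```python
from typing import Optional


def final_grade(exam_points: float, homework_points: float,
                exam_max: float = 100.0,
                homework_max: float = 60.0) -> Optional[int]:
    """Hypothetical sketch of the grading rules.

    Assumes exam_max = 10 questions x 10 points and
    homework_max = 6 assignments x 10 points.
    Returns 1, 2 or 3, or None if the course is not passed.
    """
    exam_pct = 100.0 * exam_points / exam_max
    homework_pct = 100.0 * homework_points / homework_max
    # Both minimums must be met regardless of the weighted total.
    if exam_pct < 50.0 or homework_points < 40:
        return None
    # Exam and homework weighted 3:1, i.e. 75% / 25%.
    combined = 0.75 * exam_pct + 0.25 * homework_pct
    if combined >= 87.0:
        return 1
    if combined >= 74.0:
        return 2
    if combined >= 60.0:
        return 3
    return None
```

For example, 80 exam points and 48 homework points give 0.75 · 80% + 0.25 · 80% = 80% overall, i.e. grade 2, while 50 exam points and 40 homework points meet both minimums but only reach about 54% overall, so the course is not passed.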

No cheating

- Cheating is strictly prohibited and any student found cheating will be punished. The punishment can involve failing the whole course, or, in grave cases, being expelled from the faculty.

- Discussing homework assignments with your classmates is OK. Sharing code is not OK (unless explicitly allowed); by default, you must complete the assignments yourself.

- All students involved in cheating will be punished. E.g. if you share your assignment with a friend, both you and your friend will be punished.

Recommended Reading

You should be able to pass the course just by following the lectures, but here are some hints on further reading. There's nothing ideal on the topic as this is a very active research area, but some of these should give you a broader overview.

Recommended, though slightly outdated:

- Gao et al.: Neural Approaches to Conversational AI. arXiv:1809.08267

Recommended, but might be a bit too brief:

- Jurafsky & Martin: Speech and Language Processing. 3rd ed. draft (chapter 15).

  - this one is really brief, but a good starting point

- McTear: Conversational AI: Dialogue Systems, Conversational Agents, and Chatbots. Morgan & Claypool 2021.

  - this one is the most up-to-date; it is available as an e-book from our library

Further reading:

- McTear et al.: The Conversational Interface: Talking to Smart Devices. Springer 2016.

  - good, detailed, but slightly outdated

- Jokinen & McTear: Spoken Dialogue Systems. Morgan & Claypool 2010.

  - good but outdated, some systems very specific to particular research projects

- Rieser & Lemon: Reinforcement Learning for Adaptive Dialogue Systems. Springer 2011.

  - advanced, slightly outdated, project-specific

- Lemon & Pietquin: Data-Driven Methods for Adaptive Spoken Dialogue Systems. Springer 2012.

  - ditto

- Skantze: Error Handling in Spoken Dialogue Systems. PhD Thesis 2007, Chap. 2.

  - good introduction to dialogue systems in general, albeit dated

- current papers from the field (see links on lecture slides)