Institute of Formal and Applied Linguistics

Charles University, Czech Republic

Faculty of Mathematics and Physics

Large Language Models

Goals of the course:

- Explain how the models work

- Teach basic usage of the models

- Help students critically assess what they read about the models

- Encourage thinking about the broader context of using the models

Syllabus from SIS:

- Basics of neural networks for language modeling

- Language model typology

- Data acquisition and curation, downstream tasks

- Training (self-supervised learning, reinforcement learning with human feedback)

- Finetuning & Inference

- Multilinguality and cross-lingual transfer

- Large Language Model Applications (e.g., conversational systems, robotics, code generation)

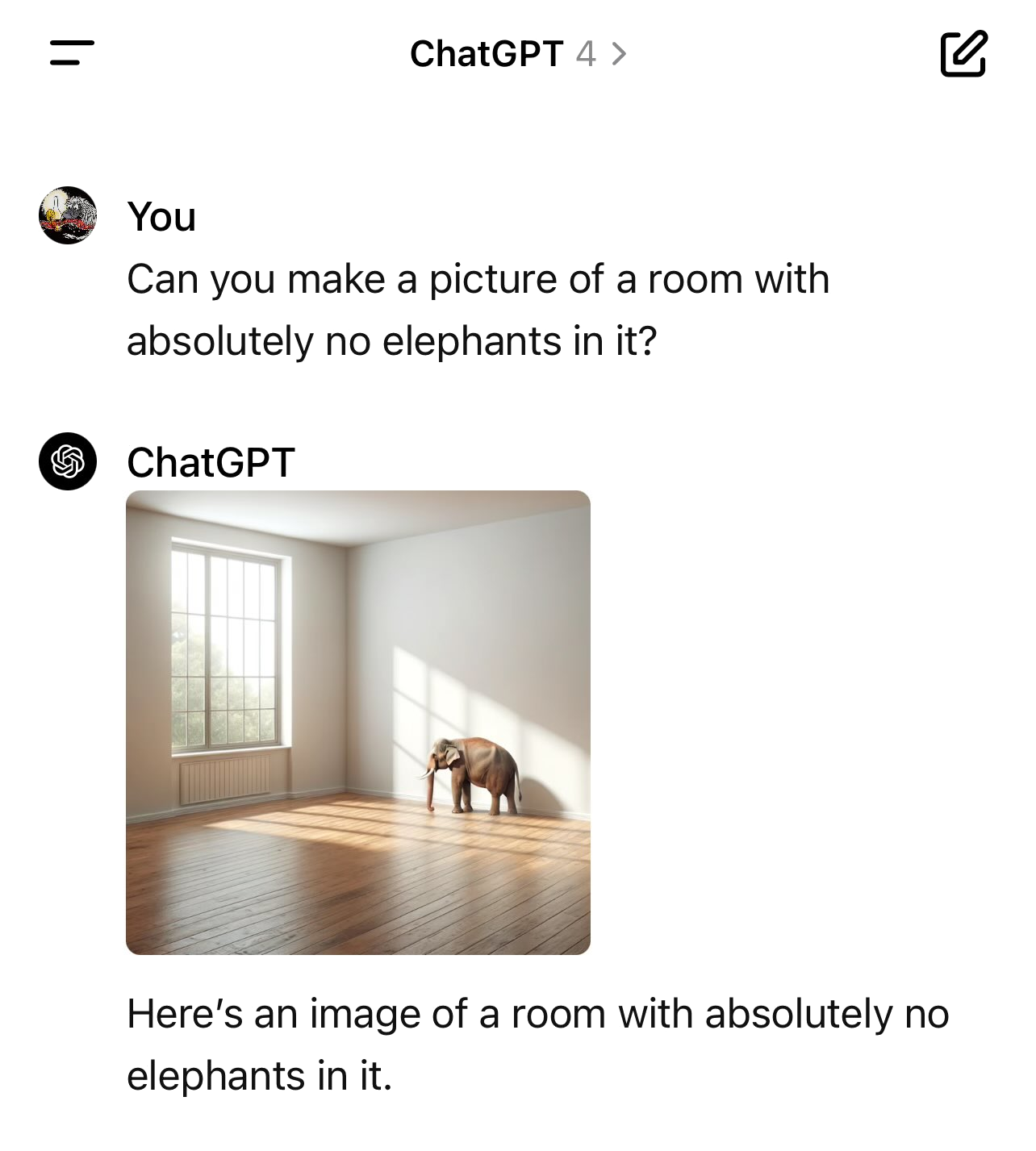

- Multimodality (CLIP, diffusion models)

- Societal impacts

- Interpretability

The course is part of the inter-university programme prg.ai Minor.

About

SIS code: NPFL140

Semester: summer

E-credits: 3

Examination: 0/2 C

Guarantors: Jindřich Helcl, Jindřich Libovický

Timespace Coordinates

The course is held on Mondays at 12:20 in S9.

Lectures

1. Introductory notes and discussion on large language models [Slides]

2. The Transformer architecture [Slides, Notes, Recording]

3. Data and Evaluation, Project Proposals [Project Proposals, Notes]

4. LLM Inference [Slides, Code, Recording]

5. LLM Training [Slides, Project teams, Recording]

6. Hands-on session (Generating weather reports) [Exercise]

7. How to get the model to do what you want: A mini-conference [Evaluation; Dialogue, Data-to-text; Simultaneous MT; Grammar vs. LLM; Structured output; Machine translation; RAG & CoT; Recording]

8. Multilinguality, tokenization, machine translation [Slides, Recording]

9. Reading News Stories on LLMs [Slides]

License

Unless otherwise stated, teaching materials for this course are available under CC BY-SA 4.0.

1. Introductory notes and discussion on large language models

Feb 17 Slides

Instructor: Jindřich Helcl

Covered topics: aims of the course, passing requirements. We discussed what (large) language models are, what they are for, and what their benefits and downsides are. We briefly talked about the Transformer architecture. We concluded with a rough analysis of ChatGPT performance in different languages.

2. The Transformer architecture

Instructor: Jindřich Libovický

Learning objectives. After the lecture you should be able to...

- Explain the building blocks of the Transformer architecture to a non-technical person.

- Describe the Transformer architecture using equations, especially the self-attention block.

- Implement the Transformer architecture (in PyTorch or another framework with automatic differentiation).
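As a compact reference for the equation-level description, the self-attention block is usually written as scaled dot-product attention. The sketch below assumes a single attention head, input states X (one row per token), learned projection matrices W_Q, W_K, W_V, and key dimension d_k; these symbol names are our own choice and may differ from the lecture notes. The causal mask M applies to decoder-style (GPT-like) models and is left out in encoders.

```latex
Q = X W_Q, \qquad K = X W_K, \qquad V = X W_V

\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\!\left( \frac{Q K^\top}{\sqrt{d_k}} + M \right) V,
\qquad
M_{ij} = \begin{cases} 0 & \text{if } j \le i \\ -\infty & \text{if } j > i \end{cases}
```

Multi-head attention runs several such heads in parallel and concatenates their outputs before a final linear projection.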

Additional materials.

- Transformers explained by AI Coffee Break with Letitia (20 min)

- Let's build GPT: from scratch, in code, spelled out by Andrej Karpathy (2 hours)

- The Illustrated Transformer by Jay Alammar

3. Data and Evaluation, Project Proposals

Mar 3 Project Proposals Notes Team Coordination Team Registration

Instructor: Jindřich Helcl

Learning objectives. After the class you should be able to...

- Know about different NLP tasks that we can solve with LLMs.

- Look for suitable datasets applicable to the task (both for fine-tuning and evaluation).

- Describe (and be aware of) the different characteristics of the relevant data (size, structure, origin, etc.).

- Design experiments in terms of selecting the right baseline model, data and metric (and have some idea about what the result should be).

Team coordination document. We suggest that teams looking for members, as well as individuals looking for a team, use the following shared document for coordination. You can look for a team there and advertise your team to others.

Team registration form. The registration form for teams is available here.

Individual registration. For those who do not succeed in forming or finding a team, we will open an individual registration form later and try to assign you to an existing team.

4. LLM Inference

Instructor: Zdeněk Kasner

Learning objectives. After the class you should be able to...

- Understand how to generate text with a Transformer-based language model.

- Explain differences between decoding algorithms and the role of decoding parameters.

- Choose a suitable LLM for your task.

- Run an LLM locally on your computer or a computational cluster.
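To make the role of decoding parameters concrete, here is a minimal sketch of greedy decoding, temperature scaling, and top-k sampling for a single generation step. It assumes we already have the model's next-token logits as a plain list of floats; the function name `sample_next_token` and the toy logits are hypothetical, and treating `temperature=0` as greedy decoding is a convention chosen for this sketch (libraries such as Hugging Face Transformers or Ollama implement these options internally and may handle edge cases differently).

```python
import math
import random


def softmax(logits):
    """Turn raw scores into a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Pick the index of the next token from (hypothetical) next-token logits.

    temperature == 0 -> greedy decoding (argmax), by convention in this sketch;
    temperature < 1 sharpens the distribution, temperature > 1 flattens it;
    top_k keeps only the k highest-scoring candidates before sampling.
    """
    if temperature == 0:
        # Greedy decoding: always take the most likely token.
        return max(range(len(logits)), key=lambda i: logits[i])

    candidates = list(range(len(logits)))
    if top_k is not None:
        # Keep only the indices of the k largest logits.
        candidates = sorted(candidates, key=lambda i: logits[i], reverse=True)[:top_k]

    # Divide logits by the temperature, then renormalise over the candidates.
    probs = softmax([logits[i] / temperature for i in candidates])
    return rng.choices(candidates, weights=probs, k=1)[0]


if __name__ == "__main__":
    toy_logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for a 4-token vocabulary
    print(sample_next_token(toy_logits, temperature=0))  # always index 0
    print(sample_next_token(toy_logits, temperature=0.7, top_k=2))  # 0 or 1
```

Greedy decoding is deterministic, while sampling trades determinism for diversity; a full generation loop would call such a function repeatedly, appending each chosen token to the context.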

Additional materials.

- Deep Dive into LLMs like ChatGPT by Andrej Karpathy (3.5 hours)

- HuggingFace Models, the repository of open (L)LMs

- Awesome LLM, a curated list of resources for LLMs

- LLM Visualization, a 3D visualization of transformer inference

- How to generate text, the Huggingface decoding algorithms overview

- Generation with LLMs, common pitfalls when generating text with LLMs

5. LLM Training

Mar 17 Slides Project teams Recording

Instructor: Ondřej Dušek

Learning objectives. After the class you should be able to...

- Give a high-level description of how neural networks, and transformers in particular, are trained.

- Understand self-supervised training and pretraining.

- Explain the differences between various advanced training techniques used in LLMs today, compared to basic next-word prediction.

Additional materials.

- How Neural Networks are Trained, a detailed overview of NN training

- TensorFlow Playground, an interactive website for NN training (train your own teeny tiny NN)

- Illustrating Reinforcement Learning from Human Feedback (RLHF), a Huggingface blogpost

- ChatGPT: This AI has a JAILBREAK?! (Unbelievable AI Progress), a Yannic Kilcher video that includes a quick explanation of RLHF (don't mind the hype, the video was from the time when ChatGPT was really new)

- Direct Preference Optimization, a video explanation on AI Coffee Break with Letitia

- Explainer: What's R1 & Everything else, a quick blogpost on DeepSeek-R1 and reasoning models (i.e. test-time compute)

- Scaling Test-time Compute with Open Models, a Huggingface blogpost on the same topic

- Phi-4 Technical Report, with details on the relatively heavy use of synthetic data

6. Hands-on session (Generating weather reports)

Mar 24 Exercise Shared Report

Instructor: Jindřich Helcl

Learning objectives. After the class, you should be able to...

- Run inference with the Ollama API.

- Describe the influence of different decoding parameters on the nature of the outputs.

- Choose an appropriate prompting technique for different models.

Additional materials.

7. How to get the model to do what you want: A mini-conference

Mar 31 Evaluation Dialogue, Data-to-text Simultaneous MT Grammar vs. LLM Structured output Machine translation RAG & CoT Recording

The class provided an overview of how researchers at ÚFAL use LLMs in their work:

- Patricia Schmidtová: How to tell if the LLM is actually doing what you want?

- Ondřej Dušek: LLMs for Dialogue & Data-to-text

- Dominik Macháček: Simultaneous Speech Translation

- Gianluca Vico: Grammar VS LLMs

- Zdeněk Kasner: Structured outputs and their applications

- Josef Jon, Miroslav Hrabal: Machine Translation using LLMs

- Rudolf Rosa: Retrieval augmented generation, Chain-of-thought prompting

8. Multilinguality, tokenization, machine translation

Instructor: Jindřich Libovický

Learning objectives. After the class, you should be able to...

- Name benefits of multilingual language models and cross-lingual transfer.

- Pick a multilingual model suitable for a specific language based on its training data, the similar languages it covers, and its tokenizer properties.

- Describe the most common tokenization algorithms and list their advantages and disadvantages.

- Use LLMs for machine translation, including evaluating the translations.
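As an illustration of one of the most common tokenization algorithms, byte-pair encoding (BPE), here is a minimal sketch of learning merge rules from a toy word list. It is a simplification, not the tokenizer of any particular model: it starts from characters rather than bytes and omits pre-tokenization and special tokens. The function names and the toy corpus are hypothetical.

```python
from collections import Counter


def get_pair_counts(words):
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a list of words."""
    # Each word starts as a tuple of single characters, counted by frequency.
    words = Counter(tuple(w) for w in corpus)
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(words)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair (first one wins ties)
        merges.append(best)
        words = merge_pair(words, best)
    return merges, words


if __name__ == "__main__":
    merges, words = learn_bpe(["low", "low", "lower", "lowest"], 3)
    print(merges)  # → [('l', 'o'), ('lo', 'w'), ('low', 'e')]
```

When tokenizing new text, the learned merges are applied in the order they were learned; byte-level variants used by GPT-style models start from bytes instead of characters so that any input string can be encoded.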

Additional materials.

- Andrej Karpathy: Let's build the GPT Tokenizer, a 2.2-hour video

- The Tokenizer Playground, a demo of the tokenizers of popular models running in a browser

- Huggingface blog: https://huggingface.co/spaces/huggingface/number-tokenization-blog

9. Reading News Stories on LLMs

Apr 14 Slides

Project work

You will work on a team project during the semester.

Reading assignments

You will be asked at least once to read a paper before the class.

Final written test

You need to take part in a final written test that will not be graded.