Institute of Formal and Applied Linguistics

Charles University, Czech Republic

Faculty of Mathematics and Physics

NPFL104 – Machine Learning Methods

The course is focused on practical exercises in applying machine learning techniques to real data. Students are expected to be familiar with basic machine learning concepts.

About

SIS code:

NPFL104

Semester: summer

E-credits: 5

Examination: 1/2 credit+exam

Teachers

Whenever you have a question or need some help (and Googling does not work), contact us as soon as possible! Please always e-mail both of us.

Classes

- the classes combine lectures and practicals

- in 2018/2019, the time and place of the classes are T.B.A.

Requirements

To pass the course, you will need to submit homework assignments and do a written test. See Grading for more details.

License

Unless otherwise stated, teaching materials for this course are available under CC BY-SA 4.0.

Classes

2. Selected classification techniques hw_my_classif

3. Classification dataset preparation hw_my_dataset

4. Selected classification techniques, cont.

6. Scikit-learn hw_scikit_classif

7. Diagnostics & kernel methods cont. Slides (Diagnostics, Kernels Illustrated) Extra slides (Ng) Extra slides (Cohen) Reading: Bias-Variance Proof hw_gridsearch

8. Regression hw_my_regression

9. Feature engineering, Regularization Slides (Feature engineering) hw_scikit_regression

10. Clustering hw_my_clustering

12. Overall hw evaluation, solution highlights

Legend:

- Slides

- Video

- Homework assignment

- Additional reading

- Test questions

1. Introduction

Feb 20

Course aim

To provide students with intensive practical experience in applying Machine Learning techniques to real data.

Course strategy

Until (time) exhausted, loop as follows:

- Do-It-Yourself step - develop your own toy implementations of basic ML techniques (in Python) to understand the core concepts,

- Do-It-Well step - learn to use existing Python libraries and routinize their application on a number of example datasets

Overview of scheduled topics

Three blocks of classes corresponding to basic ML tasks...

- classification

- regression

- clustering

... interleaved with classes on common topics such as

- feature engineering

- ML diagnostics.

Today - a quick intro to Python (if needed)

Let's borrow some googled materials:

- Introduction to Python by Nowell Strite

- Introduction to Python by "Amiable Indian"

- Python: Introduction for Absolute Beginners by Bob Dowling

- The official Python Tutorial

- Coming from Perl? The Perl-Python phrasebook might be interesting for you.

- Practice coding basics in Python:

- simple Python code tasks with on-line evaluation at CodingBat

2. Selected classification techniques

Feb 27

Some basic classification methods

We'll recall the task of classification, go through a few selected basic classification techniques, and discuss their pros and cons:

- decision trees

- perceptron

- K nearest neighbors

- Naive Bayes
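To make one of these methods concrete, here is a minimal perceptron sketch in NumPy. It assumes numeric feature vectors and labels in {-1, +1}; the function names are our own and the toy data is made up for illustration.

```python
import numpy as np

def train_perceptron(X, y, epochs=20):
    """Classic perceptron rule; X has shape (n, d), y holds labels in {-1, +1}."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            # Update only when the point is misclassified (or lies on the boundary).
            if yi * (xi @ w + b) <= 0:
                w += yi * xi
                b += yi
                mistakes += 1
        if mistakes == 0:  # a full pass without errors: the training data is separated
            break
    return w, b

def predict(X, w, b):
    return np.where(X @ w + b > 0, 1, -1)

# Toy linearly separable data: class +1 lies above the line x1 + x2 = 3.5.
X = np.array([[0, 0], [1, 1], [0, 2], [3, 2], [2, 3], [4, 4]], dtype=float)
y = np.array([-1, -1, -1, 1, 1, 1])
w, b = train_perceptron(X, y)
print(predict(X, w, b))
```

On non-separable data the loop simply stops after `epochs` passes, which is one of the perceptron's known weaknesses.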

3. Classification dataset preparation

Mar 6

Selecting topics for a homework task focused on creating a new classification dataset. hw_my_dataset

4. Selected classification techniques, cont.

Mar 13

Slightly more advanced classification topics

- some more classification methods in detail:

- logistic regression

- support vector machines

- multiclass classification

- native binary vs. native multiclass setups

- conversion strategies (one against one, one against all)
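A minimal sketch of the one-against-all strategy, assuming NumPy and a hand-written binary logistic regression trained by plain batch gradient descent. The learning rate, the number of epochs and the synthetic three-class data are arbitrary choices for illustration, not tuned values. Each class gets its own binary model, and prediction picks the class whose model gives the highest score.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_logreg(X, y01, lr=0.1, epochs=500):
    """Binary logistic regression by batch gradient descent on the mean log loss; y01 in {0, 1}."""
    Xb = np.hstack([X, np.ones((len(X), 1))])  # append a bias column
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = sigmoid(Xb @ w)
        w -= lr * Xb.T @ (p - y01) / len(y01)  # gradient of the mean log loss
    return w

def train_one_vs_rest(X, y):
    """One binary model per class: that class (1) against all the others (0)."""
    classes = np.unique(y)
    return classes, [train_logreg(X, (y == c).astype(float)) for c in classes]

def predict_one_vs_rest(X, classes, weights):
    Xb = np.hstack([X, np.ones((len(X), 1))])
    scores = np.column_stack([Xb @ w for w in weights])  # one column per binary model
    return classes[np.argmax(scores, axis=1)]            # the most confident model wins

rng = np.random.default_rng(0)
centers = np.array([[0, 0], [4, 0], [0, 4]])
X = np.vstack([c + rng.normal(size=(50, 2)) for c in centers])
y = np.repeat(np.array(["a", "b", "c"]), 50)
classes, weights = train_one_vs_rest(X, y)
acc = np.mean(predict_one_vs_rest(X, classes, weights) == y)
print(f"training accuracy: {acc:.2f}")
```

One-against-one would instead train a model for every pair of classes and let the pairwise models vote.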

5. Kernels

Mar 20

Kernel methods in classification

- warmup: comparing 0-1 loss, logistic loss, and hinge loss

- the kernel trick

- polynomial and RBF kernel functions

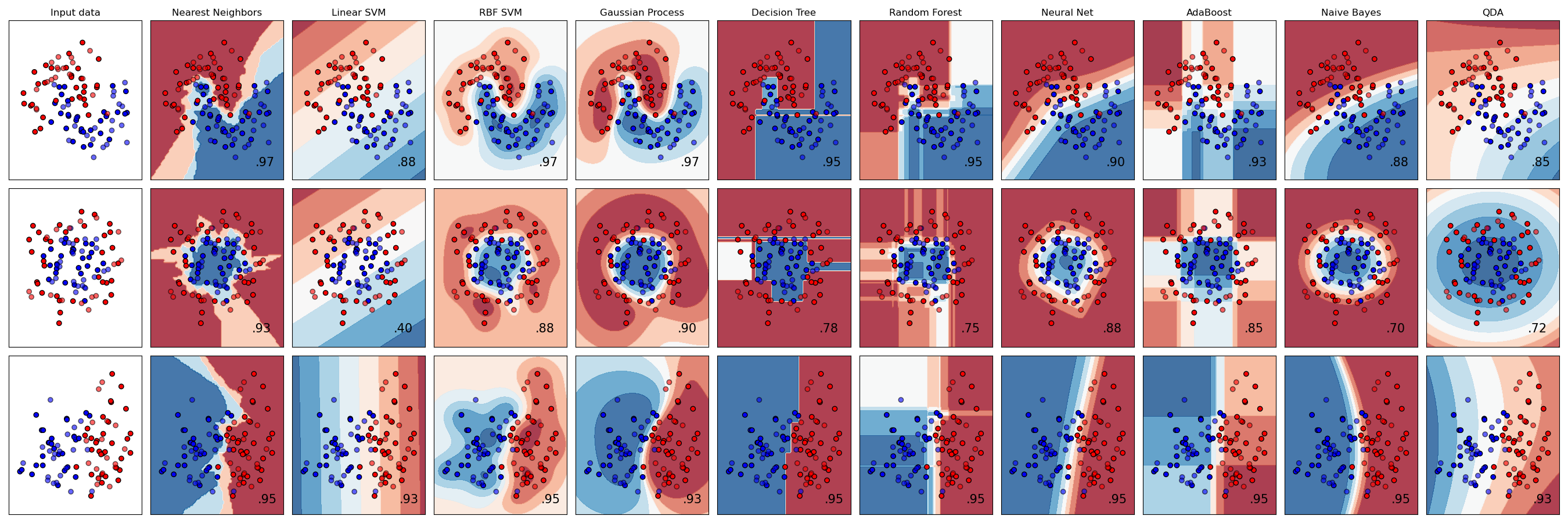
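The warm-up losses and the kernel trick can be checked numerically. The sketch below (NumPy, our own helper names) writes each loss as a function of the margin m = y f(x) with labels in {-1, +1}, uses a base-2 logarithm for the logistic loss so that it passes through 1 at m = 0, and verifies for 2-D inputs that the degree-2 polynomial kernel equals a dot product of explicitly mapped features.

```python
import numpy as np

# Losses as functions of the margin m = y * f(x).
def zero_one_loss(m):
    return (m <= 0).astype(float)   # 1 for a misclassified (or boundary) point

def logistic_loss(m):
    return np.log2(1 + np.exp(-m))  # base 2, so the loss equals 1 at m = 0

def hinge_loss(m):
    return np.maximum(0.0, 1 - m)   # zero only once the margin reaches 1

margins = np.array([-2.0, 0.0, 0.5, 1.0, 2.0])
for name, loss in [("0-1", zero_one_loss), ("logistic", logistic_loss), ("hinge", hinge_loss)]:
    print(name, np.round(loss(margins), 3))

# Kernel trick: for 2-D inputs, k(x, z) = (x . z)^2 equals an ordinary dot product
# in the explicit feature space phi(x) = (x1^2, sqrt(2) * x1 * x2, x2^2).
def phi(x):
    return np.array([x[0] ** 2, np.sqrt(2) * x[0] * x[1], x[1] ** 2])

def poly_kernel(x, z, degree=2):
    return (x @ z) ** degree

def rbf_kernel(x, z, gamma=0.5):
    return np.exp(-gamma * np.sum((x - z) ** 2))

x, z = np.array([1.0, 2.0]), np.array([3.0, -1.0])
print(poly_kernel(x, z), phi(x) @ phi(z))  # equal up to rounding, but phi is never needed
print(rbf_kernel(x, z))
```

The RBF kernel corresponds to an infinite-dimensional feature map, so there the trick is the only practical way to use it.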

6. Scikit-learn

Mar 27

Introduction to Scikit-learn

- Classifier comparison in Scikit-learn

- for the scikit-learn common estimator API, see slide 47 in Introduction to Machine Learning with Scikit-learn
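The common estimator API boils down to `fit` and `predict` (plus `score`). A small sketch using the Iris data bundled with scikit-learn; the choice of classifiers and the 70/30 split are illustrative assumptions, not part of the course material.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

classifiers = {
    "knn": KNeighborsClassifier(n_neighbors=5),
    "tree": DecisionTreeClassifier(random_state=0),
    "naive_bayes": GaussianNB(),
    "logreg": LogisticRegression(max_iter=1000),
}

scores = {}
for name, clf in classifiers.items():
    clf.fit(X_train, y_train)                 # every estimator is trained with fit(X, y)
    y_pred = clf.predict(X_test)              # ... and applied with predict(X)
    scores[name] = (y_pred == y_test).mean()  # same value as clf.score(X_test, y_test)
    print(f"{name:12s} accuracy = {scores[name]:.3f}")
```

Because all classifiers share this interface, swapping one for another is a one-line change, which is what makes comparing many of them cheap.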

7. Diagnostics & kernel methods cont.

April 3

Slides (Diagnostics, Kernels Illustrated) Extra slides (Ng) Extra slides (Cohen) Reading: Bias-Variance Proof

- Visualizing

- Bias-Variance, Overfitting-Underfitting

- Search vs. Modelling Error

- Error Analysis, Ablative Analysis

- Other nice sources:

- slides by Cohen on bias-variance decomposition

- slides by Ng on diagnosing in general

- scikit cheat sheet listing which ML methods are available in scikit
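Underfitting and overfitting can be seen by comparing training and held-out error as model capacity grows. A NumPy sketch, assuming a noisy sine curve as the unknown function and polynomial degrees 1, 3 and 9 as three capacities:

```python
import numpy as np

rng = np.random.default_rng(42)

def make_data(n):
    x = rng.uniform(0, 1, n)
    y = np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=n)  # assumed true function + noise
    return x, y

x_train, y_train = make_data(15)
x_test, y_test = make_data(200)

def mse(y, y_hat):
    return np.mean((y - y_hat) ** 2)

results = {}
for degree in (1, 3, 9):
    coefs = np.polyfit(x_train, y_train, degree)  # least-squares polynomial fit
    results[degree] = (mse(y_train, np.polyval(coefs, x_train)),
                       mse(y_test, np.polyval(coefs, x_test)))
    print(f"degree {degree}: train MSE {results[degree][0]:.3f}, "
          f"test MSE {results[degree][1]:.3f}")
```

Typically the degree-1 fit has high error on both sets (high bias), while the degree-9 fit has the lowest training error but a larger gap to the test error (high variance).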

8. Regression

April 10

- locally weighted regression (simple interpolation, K-nn averaging, weighting by a kernel function)

- setup of the regression task in machine learning

- linear regression in detail

- basis functions for handling non-linear dependencies

- probabilistic interpretation of the least squares optimization criterion (least squares gives the maximum-likelihood estimate under Gaussian noise, an assumption often motivated by the central limit theorem)

- optimization by stochastic gradient descent
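A minimal sketch of least squares fitted by stochastic gradient descent, assuming a single input feature and synthetic data generated like the artificial y(x) = 2x + N(0,1) dataset used in the regression homework. The learning rate and the number of epochs are untuned guesses.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic data mimicking the artificial dataset: y = 2x + N(0, 1).
x = rng.uniform(-5, 5, size=500)
y = 2 * x + rng.normal(size=500)

def sgd_least_squares(x, y, lr=0.002, epochs=50, seed=0):
    """Fit y ~ w*x + b by minimising the squared error one example at a time."""
    order_rng = np.random.default_rng(seed)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for i in order_rng.permutation(len(x)):  # visit the examples in random order
            err = (w * x[i] + b) - y[i]           # residual of the current prediction
            w -= lr * err * x[i]                  # gradient of err^2 / 2 w.r.t. w
            b -= lr * err                         # ... and w.r.t. b
    return w, b

w, b = sgd_least_squares(x, y)
print(f"w = {w:.3f}, b = {b:.3f}, train MSE = {np.mean((w * x + b - y) ** 2):.3f}")
```

With a constant learning rate the estimates keep jittering slightly around the optimum; a decaying rate would settle them.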

9. Feature engineering, Regularization

April 17

- additional reading:

- p-norms for Lp regularization

- What is the difference between L1 and L2 regularization?

- What is regularization in plain English?
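To see what an L2 penalty does, here is a NumPy sketch of ridge regression (least squares plus lambda times the squared L2 norm of w) solved in closed form on synthetic data where only 3 of 10 features matter. It assumes no intercept term; in practice one would usually standardize the features and leave the intercept unpenalized.

```python
import numpy as np

rng = np.random.default_rng(1)
n, d = 30, 10
X = rng.normal(size=(n, d))
true_w = np.zeros(d)
true_w[:3] = [3.0, -2.0, 1.0]  # only 3 of the 10 features matter
y = X @ true_w + rng.normal(scale=0.5, size=n)

def ridge(X, y, lam):
    """Minimise ||Xw - y||^2 + lam * ||w||^2 via the normal equations."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

norms = []
for lam in (0.0, 1.0, 10.0, 100.0):
    w = ridge(X, y, lam)
    norms.append(np.linalg.norm(w))
    print(f"lambda = {lam:6.1f}  ||w||_2 = {norms[-1]:.3f}  w[:4] = {np.round(w[:4], 2)}")
```

An L1 penalty has no closed form like this, but it tends to drive irrelevant weights exactly to zero rather than just shrinking them.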

10. Clustering

April 24

- clustering vs. classification

- scikit cheat sheet as a very simple guide

- K-means

Clustering evaluation:

Choosing the number of clusters:

- Silhouette analysis: scikit demo

- Elbow method: here

- ... both are rather heuristic.
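A compact NumPy sketch of K-means (Lloyd's iterations) together with the elbow heuristic. The farthest-point initialization is our own simple choice (a deterministic relative of k-means++), and the three well-separated blobs are synthetic, so the elbow at K = 3 is built in.

```python
import numpy as np

def assign(X, centroids):
    """Index of the nearest centroid for every point, plus all squared distances."""
    d2 = np.sum((X[:, None, :] - centroids[None, :, :]) ** 2, axis=2)  # shape (n, k)
    return np.argmin(d2, axis=1), d2

def kmeans(X, k, n_iter=100, seed=0):
    rng = np.random.default_rng(seed)
    # Farthest-point initialization: a random first centroid, then repeatedly
    # the point farthest from all centroids chosen so far.
    centroids = X[[rng.integers(len(X))]]
    while len(centroids) < k:
        _, d2 = assign(X, centroids)
        centroids = np.vstack([centroids, X[np.argmax(d2.min(axis=1))]])
    centroids = centroids.astype(float)
    for _ in range(n_iter):
        labels, _ = assign(X, centroids)  # assignment step
        # Update step: move each centroid to the mean of its points (keep it if the cluster is empty).
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
                        for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    labels, d2 = assign(X, centroids)
    inertia = d2[np.arange(len(X)), labels].sum()  # within-cluster sum of squares
    return labels, centroids, inertia

rng = np.random.default_rng(0)
true_centers = np.array([[0.0, 0.0], [8.0, 0.0], [0.0, 8.0]])
X = np.vstack([c + rng.normal(scale=0.5, size=(50, 2)) for c in true_centers])
true_labels = np.repeat([0, 1, 2], 50)

# Elbow method: inertia for several K; look for the "knee" of the curve.
inertias = {}
for k in range(1, 7):
    _, _, inertias[k] = kmeans(X, k)
    print(f"K = {k}: inertia = {inertias[k]:.1f}")

labels, centroids, _ = kmeans(X, 3)
```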

11. Clustering, cont.

May 15

Clustering, cont.

Mixture of Gaussians and EM

Hierarchical clustering

- Agglomerative, divisive; dendrogram.

- Single linkage, complete linkage, average linkage, centroid method.

- a tutorial for SciPy hierarchical clustering
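For the mixture of Gaussians, EM alternates between soft assignments of points to components (E-step) and weighted re-estimation of the parameters (M-step). A minimal 1-D NumPy sketch, assuming synthetic data from two components and initializing the means at data quantiles (our choice):

```python
import numpy as np

def normal_pdf(x, mu, var):
    return np.exp(-(x - mu) ** 2 / (2 * var)) / np.sqrt(2 * np.pi * var)

def em_gmm_1d(x, k=2, n_iter=200):
    """EM for a 1-D mixture of k Gaussians; returns mixing weights, means, variances."""
    pi = np.full(k, 1.0 / k)
    mu = np.quantile(x, (np.arange(k) + 0.5) / k)  # spread the initial means over the data
    var = np.full(k, np.var(x))
    for _ in range(n_iter):
        # E-step: responsibilities r[i, j] = P(component j | x_i)
        dens = pi * normal_pdf(x[:, None], mu, var)  # shape (n, k)
        r = dens / dens.sum(axis=1, keepdims=True)
        # M-step: re-estimate the parameters from the soft counts
        nk = r.sum(axis=0)
        pi = nk / len(x)
        mu = (r * x[:, None]).sum(axis=0) / nk
        var = (r * (x[:, None] - mu) ** 2).sum(axis=0) / nk
    return pi, mu, var

rng = np.random.default_rng(1)
x = np.concatenate([rng.normal(-2.0, 0.5, 300), rng.normal(3.0, 1.0, 700)])
pi, mu, var = em_gmm_1d(x)
order = np.argsort(mu)
print("weights", np.round(pi[order], 2), "means", np.round(mu[order], 2),
      "variances", np.round(var[order], 2))
```

Replacing the soft responsibilities with hard 0/1 assignments and fixing equal variances turns these updates into K-means.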

12. Overall hw evaluation, solution highlights

May 22

Assignment

1. hw_python

Create your git repository at UFAL's Redmine by following these instructions; just replace npfl092 with npfl104 and 2017 with 2019.

Continue practicing your knowledge of Python:

- Implement at least 10 tasks from CodingBat (only from categories Warmup-2, List-2, or String-2).

- Implement a simple class, anything with at least two methods and some data attributes (really anything).

For all tasks, add short testing code snippets into the respective source codes (e.g. count_evens.py should contain your implementation of count_evens function, as well as a short test checking the function's answer on at least one problem instance). Run them from a single Makefile: after typing 'make' we should see confirmations of correct functionality.
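For illustration, a file in this style might look as follows. This is a sketch: `count_evens` is the CodingBat List-2 task (count the even ints in a list), and having a Makefile target simply run `python3 count_evens.py` is our assumption about how the pieces fit together.

```python
def count_evens(nums):
    """CodingBat List-2: return the number of even ints in the given list."""
    return sum(1 for n in nums if n % 2 == 0)

if __name__ == "__main__":
    # Short self-test; a failing assert stops 'make' with an error.
    assert count_evens([2, 1, 2, 3, 4]) == 3
    assert count_evens([1, 3, 5]) == 0
    print("count_evens: OK")
```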

Submit the solutions into hw/python in your git repository for this course.

Deadline: Mar 6 (23:59 Prague time, which applies to all deadlines below too)

2. hw_my_classif

Homework my-classifiers:

- Do-It-Yourself style.

- Implement three classifiers in Python and evaluate their performance on the given datasets.

- Choose three classification techniques out of the following four: perceptron, Naive Bayes, KNN, decision trees.

- You can use any existing libraries, e.g. for data loading/storage or for other manipulation with data (including e.g. one-hot conversions), with one exception: the machine learning core of each classifier (i.e., the training and prediction pieces of the code) must be written solely by yourself.

- Apply the three classifiers on the following datasets and measure their accuracy (= percentage of correctly predicted instances):

- a synthetic dataset (colored objects), a perfectly separable version and a version with added noise: artificial_objects.tgz

- adult income prediction dataset from the Machine Learning Repository at UCI

- Organize the execution of the experiments using a Makefile: 'make download' downloads the data files from the URLs given above; 'make perc' should run training and evaluation of the perceptron (if it's in your selection) for both datasets and print out the final accuracy for both (while other output info is stored in the perc.log file); 'make nb', 'make knn' and 'make dt' should work analogously (three of four are enough); 'make all' should call the data download and all your three classification targets.

After finishing the task, store it into hw/my-classifiers in your git repository for this course.

Please double-check that 'make all' works in a fresh git clone in the default SU1 environment (you can access the SU1 computers remotely by ssh).
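A minimal K-nearest-neighbors sketch in the Do-It-Yourself spirit, together with the accuracy measure as defined above. It assumes purely numeric features (categorical ones, such as those in the adult dataset, would first need e.g. one-hot conversion) and uses made-up toy points.

```python
from collections import Counter

import numpy as np

def knn_predict(X_train, y_train, X_test, k=3):
    """Majority vote among the k nearest training points (Euclidean distance)."""
    preds = []
    for x in X_test:
        dist = np.sqrt(np.sum((X_train - x) ** 2, axis=1))
        nearest = np.argsort(dist)[:k]
        preds.append(Counter(y_train[nearest]).most_common(1)[0][0])
    return np.array(preds)

def accuracy(y_true, y_pred):
    """Percentage of correctly predicted instances."""
    return 100.0 * np.mean(np.asarray(y_true) == np.asarray(y_pred))

X_train = np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]], dtype=float)
y_train = np.array(["red", "red", "red", "blue", "blue", "blue"])
X_test = np.array([[0.5, 0.5], [5.5, 5.5], [1, 1]])
y_test = np.array(["red", "blue", "red"])
print(f"accuracy: {accuracy(y_test, knn_predict(X_train, y_train, X_test)):.1f}%")
```

Since all work happens at prediction time, this naive loop becomes slow on larger data such as the adult dataset; vectorizing the distance computation over batches helps.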

Deadline: Mar 13

3. hw_my_dataset

- Please prepare your dataset(s) in two-person teams (as agreed in the class) and commit them to the Redmine git repository of one member of your team.

In your repository, create this structure of directories:

hw/my-dataset/ob-sample/ # one subdir per dataset variation

hw/my-dataset/ob-sample-with-derived-features/ # another variation

- The name of your dataset should be the one listed in the ML Methods Datasets 2019 Google Sheet.

- Choose the name of your dataset to be a valid identifier, i.e. no spaces, preferably only lowercase letters.

- Within each dataset directory (ob-sample or ob-sample-with-derived-features in the example here), provide these files:

- train.txt.gz, test.txt.gz - [compulsory] the training and test data

Both files have to be gzipped plaintexts, comma-delimited, with Unix line breaks.

The class label has to be in the last field of each line. (Use class labels relevant for your dataset, i.e. symbolic names such as Good, Bad. Avoid meaningless numbers.)

...this means that your dataset already has to be converted to reasonable features. If there are more sensible ways to featurize your dataset, feel free to create more dataset variations.

If your data is over ~7MB, please commit a Makefile, not the actual data. Running make in your dataset directory should download/obtain these files from somewhere.

- header.txt - [optional] comma-delimited one-line file with column labels. Concatenating the header and train/test would create one table with column labels.

- README - [compulsory] a brief description of the dataset, its source URL, your processing etc.

- corrplot.pdf or histogram-of-labels.pdf or similar - [compulsory] Choose one way of visualizing the data to give a quick overview of it.

Some examples on visualization in Python will be added in the coming days.
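A sketch of writing and reading a split in the required format using only the Python standard library. The rows are hypothetical. Note that `csv.writer` ends lines with `\r\n` by default, so `lineterminator="\n"` is set explicitly to get Unix line breaks.

```python
import csv
import gzip
import os
import tempfile

def write_split(path, rows):
    """Write rows (features..., label) as gzipped, comma-delimited text with Unix line breaks."""
    with gzip.open(path, "wt", newline="", encoding="utf-8") as f:
        writer = csv.writer(f, lineterminator="\n")
        writer.writerows(rows)

def read_split(path):
    """Read a split back; the class label is the last field of each line."""
    with gzip.open(path, "rt", newline="", encoding="utf-8") as f:
        rows = list(csv.reader(f))
    return [r[:-1] for r in rows], [r[-1] for r in rows]

# Hypothetical rows: two features plus a symbolic class label in the last column.
path = os.path.join(tempfile.mkdtemp(), "train.txt.gz")
rows = [["5.1", "red", "Good"], ["4.9", "blue", "Bad"], ["6.2", "red", "Good"]]
write_split(path, rows)
X, y = read_split(path)
print(X, y)
```

Using the `csv` module rather than joining strings by hand also takes care of quoting if a feature value ever contains a comma.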

Deadline: Mar 25

4. hw_scikit_classif

- The "do-it-well" version of the my-classifiers homework.

- Apply scikit-learn classification modules on all the datasets collected by you and your colleagues (each student has to do this homework on their own, on all datasets).

- All the datasets will gradually become available in the shared repository:

- See the web for the cloning URL: https://redmine.ms.mff.cuni.cz/projects/student/repository/2019-npfl104-shared (you have to be logged in to redmine)

- Each dataset will be in a subdirectory data/DATASET-NAME.

- Choose at least 4 different classifiers from those offered in Scikit-learn (there are many of them, e.g. svm.SVC, KNeighborsClassifier, BernoulliNB, tree.DecisionTreeClassifier, LogisticRegression ...).

- You can also use other modules for preprocessing, e.g. numpy.loadtxt or pandas for loading matrices of floats, or sklearn's DictVectorizer (from sklearn.feature_extraction import DictVectorizer) for replacing categorical features by numbers.

- You need to submit both the script that runs the experiments and the scores that you achieved:

- Your script should go to your standard repository, into hw/scikit-classifiers.

- Make sure that running make in that directory will get the data and run all the classifiers.

- Report the scores of each of your runs into the file CLASSIFICATION_RESULTS.txt in the shared repository. The format of the file is described in the file itself.

- Make sure to run the validation script check-classification-results.py < CLASSIFICATION_RESULTS.txt before pushing the file.
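A sketch of loading one split and running a few classifiers. It assumes each line is comma-delimited with the label last (as required for the collected datasets), treats every field that parses as a number as numeric and everything else as categorical, and generates a tiny hypothetical dataset in a temporary directory so that it runs on its own.

```python
import csv
import gzip
import os
import random
import tempfile

from sklearn.feature_extraction import DictVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

def load_split(path):
    """Read a gzipped, comma-delimited split whose last field is the class label."""
    feats, labels = [], []
    with gzip.open(path, "rt", newline="") as f:
        for row in csv.reader(f):
            d = {}
            for i, value in enumerate(row[:-1]):
                try:
                    d[f"f{i}"] = float(value)  # numeric field: kept as a number
                except ValueError:
                    d[f"f{i}"] = value         # categorical field: one-hot encoded by DictVectorizer
            feats.append(d)
            labels.append(row[-1])
    return feats, labels

# Hypothetical tiny dataset in the course format, written to a temporary directory.
rnd = random.Random(0)
tmp = tempfile.mkdtemp()
for split, n in (("train", 200), ("test", 100)):
    with gzip.open(os.path.join(tmp, f"{split}.txt.gz"), "wt", newline="") as f:
        writer = csv.writer(f, lineterminator="\n")
        for _ in range(n):
            x = rnd.uniform(0, 10)
            writer.writerow([f"{x:.2f}", rnd.choice(["red", "blue"]), "Good" if x > 5 else "Bad"])

train_feats, y_train = load_split(os.path.join(tmp, "train.txt.gz"))
test_feats, y_test = load_split(os.path.join(tmp, "test.txt.gz"))
vec = DictVectorizer(sparse=False)
X_train = vec.fit_transform(train_feats)  # feature columns are learned on train only
X_test = vec.transform(test_feats)        # categories unseen in train are ignored

scores = {}
for clf in (KNeighborsClassifier(), DecisionTreeClassifier(random_state=0),
            LogisticRegression(max_iter=1000)):
    clf.fit(X_train, y_train)
    name = type(clf).__name__
    scores[name] = clf.score(X_test, y_test)
    print(f"{name:24s} accuracy = {scores[name]:.3f}")
```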

Deadline: April 24

5. hw_gridsearch

homework classification-gridsearch

Use the dataset PAMAP-Easy from the shared data repository:

- See the web for the cloning URL: https://redmine.ms.mff.cuni.cz/projects/student/repository/2019-npfl104-shared (you have to be logged in to redmine)

For PAMAP-Easy as divided into train+test:

- Cross-validate on train to choose between the linear, poly and RBF kernels.

- Create the heatmap for RBF (i.e. plot score for all values of C and gamma).

- Use GridSearchCV to find the best C and gamma (i.e. find the best without plotting anything).

- NEVER USE test.txt.gz FOR THE GRIDSEARCH.

- Enter the accuracy of the best setting on test.txt.gz into CLASSIFICATION_RESULTS.txt and mention C and gamma in the comment.
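A sketch of the three steps, assuming scikit-learn's `SVC`, `cross_val_score` and `GridSearchCV`. A synthetic dataset from `make_classification` stands in for PAMAP-Easy, and the grids of C and gamma values are illustrative, not recommended ranges. The test split is used exactly once, at the very end.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, cross_val_score, train_test_split
from sklearn.svm import SVC

# Synthetic stand-in for PAMAP-Easy (the real data would be read from train.txt.gz / test.txt.gz).
X, y = make_classification(n_samples=400, n_features=8, n_informative=4, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# 1) Compare kernels by cross-validation on the training part only.
for kernel in ("linear", "poly", "rbf"):
    cv_scores = cross_val_score(SVC(kernel=kernel), X_train, y_train, cv=5)
    print(f"{kernel:6s} mean CV accuracy = {cv_scores.mean():.3f}")

# 2) Grid search over C and gamma for the RBF kernel, again on training data only.
Cs = [0.1, 1, 10, 100]
gammas = [0.001, 0.01, 0.1, 1]
search = GridSearchCV(SVC(kernel="rbf"), {"C": Cs, "gamma": gammas}, cv=5)
search.fit(X_train, y_train)

# Mean CV scores arranged as a (C x gamma) table, i.e. the data for the heatmap.
res = search.cv_results_
heat = np.zeros((len(Cs), len(gammas)))
for params, score in zip(res["params"], res["mean_test_score"]):
    heat[Cs.index(params["C"]), gammas.index(params["gamma"])] = score
print(np.round(heat, 3))

# 3) Only now touch the test set, once, with the best model refitted on all training data.
test_acc = search.score(X_test, y_test)
print("best params:", search.best_params_, "test accuracy:", round(test_acc, 3))
```

Passing `heat` to e.g. matplotlib's `imshow` gives the requested heatmap.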

Deadline: April 24

6. hw_my_regression

- homework my-regression

- do-it-yourself style task: implement any regression technique (e.g. least squares by stochastic gradient descent) and apply it on the following datasets:

- artificial data y(x) = 2*x + N(0,1): artificial_2x_train.tsv artificial_2x_test.tsv

- prices of flats in Prague, given their area (m2), type of construction, type of ownership, status, floor, equipment, cellar, and balcony: pragueestateprices_train.tsv pragueestateprices_test.tsv

- organize the execution of the experiment using a Makefile; typing make all should train and evaluate (e.g. via mean squared error) models for both datasets

- store your solution into the hw/my-regression directory in the git repository
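Any regression technique is allowed; as an alternative to least squares, here is a sketch of K-NN averaging (one of the locally weighted methods from the regression class) for a single numeric input, evaluated by mean squared error. The data is a synthetic stand-in generated like y(x) = 2x + N(0,1); the Prague flat prices would additionally need their categorical columns encoded.

```python
import numpy as np

def knn_regress(x_train, y_train, x_query, k=10):
    """Predict the average target of the k nearest training inputs (1-D inputs)."""
    x_train, y_train = np.asarray(x_train), np.asarray(y_train)
    preds = []
    for xq in np.atleast_1d(x_query):
        nearest = np.argsort(np.abs(x_train - xq))[:k]
        preds.append(y_train[nearest].mean())
    return np.array(preds)

def mse(y, y_hat):
    return float(np.mean((np.asarray(y) - np.asarray(y_hat)) ** 2))

rng = np.random.default_rng(0)
x_train = rng.uniform(0, 10, 200)
y_train = 2 * x_train + rng.normal(size=200)
x_test = rng.uniform(0, 10, 100)
y_test = 2 * x_test + rng.normal(size=100)

pred = knn_regress(x_train, y_train, x_test)
baseline = np.full_like(y_test, y_train.mean())  # always predict the training mean
print(f"k-NN averaging test MSE: {mse(y_test, pred):.3f}, mean baseline: {mse(y_test, baseline):.3f}")
```

Since the noise variance is 1, a test MSE close to 1 is about the best any model can reach on this data.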

Deadline: May 1

7. hw_scikit_regression

- The Do-it-well step

- Apply at least three scikit-learn regression modules on the datasets from the previous class on regression. You can use e.g. modules for Generalized Linear Models, Support Vector regressors, KNN regressors, Decision Tree regressors, or any other.

- Make your solution (i.e. training and evaluation (e.g. via mean squared error) on both datasets) runnable just by typing 'make'.

- Submit your solution into hw/scikit-regression/ in your git repository.
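A sketch with three scikit-learn regressors evaluated by mean squared error. Synthetic data generated like the artificial dataset stands in for the TSV files (their exact column layout is not described here, so loading them is left out), and the hyperparameters are arbitrary.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.neighbors import KNeighborsRegressor
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)
# Synthetic stand-in for artificial_2x_{train,test}.tsv: y = 2x + N(0, 1).
X_train = rng.uniform(0, 10, size=(200, 1))
y_train = 2 * X_train[:, 0] + rng.normal(size=200)
X_test = rng.uniform(0, 10, size=(100, 1))
y_test = 2 * X_test[:, 0] + rng.normal(size=100)

models = {
    "linear": LinearRegression(),
    "knn": KNeighborsRegressor(n_neighbors=10),
    "tree": DecisionTreeRegressor(max_depth=4, random_state=0),
}
errors = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    errors[name] = mean_squared_error(y_test, model.predict(X_test))
    print(f"{name:6s} test MSE = {errors[name]:.3f}")
```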

Deadline: May 19

8. hw_my_clustering

Homework my-clustering

- Do-It-Yourself style

- Implement the K-Means algorithm in a Python script that reads data in the PAMAP-Easy format and clusters the data. At the end, the script should print a summary table like the one in this example.

- Dataset for the homework: PAMAP-Easy from the shared data repository:

- See the web for the cloning URL: https://redmine.ms.mff.cuni.cz/projects/student/repository/2019-npfl104-shared (you have to be logged in to redmine)

- Commit your script and the Makefile into the my-clustering/ directory in the usual place.

- As usual, please double-check that typing make in this directory in a fresh clone works in the default SU1 environment.

Deadline: May 8

The pool of final written test questions

Questions to the topic Classification

- Describe the task of classification. (1 point)

- Describe linear classification models. (1 point)

- Explain how perceptron classifiers work. (2 points)

- Explain how Naive Bayes works. (2 points)

- Explain how KNN works. How would you choose the value of K? (3 points)

- Explain how decision trees work. Give examples of stopping criteria. (3 points)

- Explain how SVM works. (3 points)

- Compare pros and cons of any 2 classification methods which you choose out of the following 6 methods: perceptron, Naive Bayes, KNN, decision trees, SVM, Logistic Regression. (3 points)

- Explain the notion of separation boundary. (1 point)

- What does it mean that a classification dataset is linearly separable? (1 point)

- Assume there are two classes of points in 2D in the training data: A = {(0,0),(1,1)} and B = {(3,1),(2,3),(2,4)}. Could you sketch separation boundaries that would be found by (a) SVM, (b) perceptron, (c) 1-NN? (3 points)

- How would you use a binary classifier for a multiclass classification task? (2 points)

- Give two examples of "native multiclass" classifiers and two examples of "native binary" classifiers. (3 points)

- What would you do if you were supposed to solve a classification task whose separation boundary is clearly non-linear (e.g. you know that it's ball-shaped)? (2 points)

Questions to the topic Regression

- Describe the task of regression. (1 point)

- Describe the model of linear regression. (1 point)

- Describe any optimization criterion that could be used for estimating parameters of a linear regression model. (2 points)

- Describe any technique (in the sense of a search algorithm) that could be used for estimating parameters of a linear regression model if the least squares optimization criterion is used. (2 points)

- What would you do if the dependence of the predicted variable is clearly non-linear w.r.t. the input features? (2 points)

- Describe situations in which using the least squares criterion might be misleading. (2 points)

- What are the main similarities and differences between the tasks of regression and classification? Is logistic regression a regression technique or a classification technique? (3 points)

Questions to the topic Clustering

- Describe how K-means works. (2 points)

- How would you initialize the centroid vectors at the beginning of K-Means? (1 point)

- Describe how Mixture of Gaussians works. (3 points)

- Describe pros and cons of K-Means in comparison to Mixture of Gaussians. (3 points)

- Give an example of a hierarchical clustering method, and sketch a set of datapoints for which it would lead to a better clustering result compared to that of K-Means or MoG. (2 points)

- What is a dendrogram (in the context of clustering)? How can it be created? (2 points)

Questions to the topic Evaluation and diagnostics

- Explain what a learning curve is and how it can be used. (3 points)

- Why does it make sense to evaluate performance both on the training data and on held-out data? (2 points)

- What is ablative analysis? (1 point)

- Illustrate underfit/overfit problems in regression by fitting a polynomial function to a sequence of points in 2D. (2 points)

- Illustrate underfit/overfit problems in classification by modeling two (partially overlapping) classes of points in 2D. (2 points)

- What problem is typically signalled by test error being much higher than training error? (2 points)

- How would you compare the quality of two classification models used for a given task? (1 point)

- How would you compare the quality of two regression models used for a given task? (3 points)

- How would you compare the quality of two clustering models used for a given task, if some gold classification data exist? (3 points)

- How would you compare the quality of two clustering models used for a given task, if no gold classification data exist? (3 points)

- What would you use as a baseline in a classification task? (1 point)

- What would you do when your Naive Bayes classifier runs into trouble because of sharp zero probability estimates? (2 points)

- What would you do if it turns out that your decision tree classifier clearly overfits the given training data? (2 points)

Questions to the topic Feature engineering and regularization

- Explain the main idea of the kernel trick, give an example. (2 points)

- Explain what regularization in ML is used for, and give an example of a regularization term. (3 points)

- What are pros and cons of selecting features by a simple greedy search such as gradually growing the feature set from an empty set (forward selection) or gradually removing features from the full set (backward selection)? (2 points)

- What is the main difference between regularization and feature selection? (3 points)

- How is a regularization factor typically employed during training? (2 points)

- If you were supposed to reduce the number of features by selecting the most promising ones, how would you do the selection? (2 points)

- What would you do if your ML algorithm accepts only discrete features but some of your features come from a continuous domain (are real-valued)? (2 points)

- What would you do if your ML algorithm accepts only binary features but some of your features can have multiple values? (1 point)

- Give an example of a situation in which feature rescaling is needed, and an example of a rescaling method. (2 points)

- What is feature standardization? (2 points)

Homework assignments

- There will be 8-12 homework assignments.

- For most assignments, you will get points, up to a given maximum (the maximum is specified with each assignment).

- If your submission is especially good, you can get extra points (up to +10% of the maximum).

- Most assignments will have a fixed deadline (usually in 2 weeks).

- If you submit the assignment after the deadline, you will get:

- up to 50% of the maximum points if it is less than 2 weeks after the deadline;

- 0 points if it is more than 2 weeks after the deadline.

- Once we check the submitted assignments, you will see the points you got and the comments from us in:

- Studijní mezivýsledky module in the Czech version of SIS

- Study group roster module in the English version of SIS

Test

- There will be a written test at the end of the semester.

- To pass the course, you need to get at least 50% of the total points from the test.

- You can find a sample of test questions on the website.

Grading

Your grade is based on the average of your performance; the test and the homework assignments are weighted 1:1.

- 1: ≥ 90%

- 2: ≥ 70%

- 3: ≥ 50%

- 4: < 50%

For example, if you get 600 out of 1000 points for homework assignments (60%) and 36 out of 40 points for the test (90%), your total performance is 75% and you get a 2.
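The worked example above, written as a small function. The mapping of the thresholds to grades 1 to 4 is our assumption based on the Czech grading scale and the example (75% gives a 2); the separate requirement of at least 50% of the test points is checked first.

```python
def course_grade(hw_points, hw_max, test_points, test_max):
    """Return (total performance in %, grade); homework and test are weighted 1:1."""
    hw_pct = 100 * hw_points / hw_max
    test_pct = 100 * test_points / test_max
    performance = (hw_pct + test_pct) / 2
    if test_pct < 50:  # passing requires at least 50% of the test points
        return performance, 4
    for threshold, grade in ((90, 1), (70, 2), (50, 3)):
        if performance >= threshold:
            return performance, grade
    return performance, 4

print(course_grade(600, 1000, 36, 40))  # the example from the text: 60% and 90%
```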

No cheating

- Cheating is strictly prohibited and any student found cheating will be punished. The punishment can involve failing the whole course, or, in grave cases, being expelled from the faculty.

- Discussing homework assignments with your classmates is OK. Sharing code is not OK (unless explicitly allowed); by default, you must complete the assignments yourself.

- All students involved in cheating will be punished. E.g. if you share your assignment with a friend, both you and your friend will be punished.